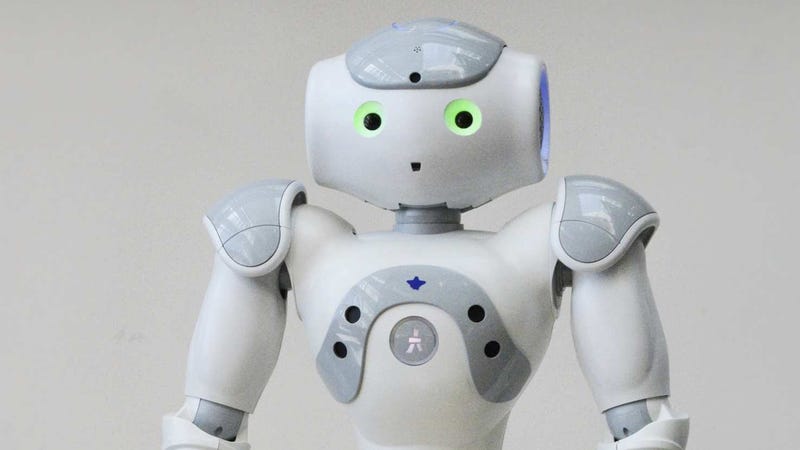

A SoftBank Robotics Nao humanoid robot used in the study. Image: Vollmer et al., Sci. Robot. 3, eaat7111 (2018)

New research shows that children are more likely than adults to give in to peer pressure from robots, a disturbing finding given the rapidly increasing rate at which kids are interacting with socially intelligent machines.

An experiment led by Anna-Lisa Vollmer from Bielefeld University in Germany is a potent reminder that modern technologies can have a profound effect on children, influencing the way they think and express opinions—even when they know, or at least suspect, their opinions are wrong.

The point of Vollmer’s experiment was to measure the social influence robots exert on both children and adults, particularly the way in which peer pressure from robots might contribute to social conformity. The results, published today in Science Robotics, show that adults are largely immune to robotic influence, but the same cannot be said of children, who conformed to the opinions of a robotic peer group, even when those opinions were clearly wrong. This research means we need to keep a close eye on the social effects robots and AI have on young children, an increasingly important issue given the frequency with which children are interacting with social machines.

Vollmer’s team used a well-established technique developed by the psychologist Solomon Asch, now known as the Asch paradigm. This technique measures how people conform to others during a simple visual judgment task. In this case, Vollmer asked her participants to match a set of vertical lines on a computer screen. The test is not hard, as it’s easy to discern which two lines match each other in size. The point is not to test visual acuity, but to assess the ability of participants to resist conformity when their peers provide the wrong answer.

For the new study, Vollmer tested both adults and children. A total of 60 adults were divided into three groups: the alone group (the control), a group involving three other human peers, and a group consisting of three robots (the SoftBank Robotics Nao humanoid robot was used for the experiment). In two of every three tests, all three members of both peer groups (the human and robotic peers) unanimously gave the wrong answer. Consistent with other studies, adults often conformed to the opinions of their human peers, even when the answers were blatantly, obviously wrong. But the adults were not persuaded by the peer pressure exerted by the social robots, resisting the incorrect answers spouted by the machines. Interestingly, this result contradicts the “computers as social actors” (CASA) hypothesis, which states that, in the words of the study’s authors, “people naturally and unconsciously treat computers and other forms of media in a manner that is fundamentally social, attributing human-like qualities to technology.”

The same experiment was done with 43 children between the ages of seven and nine. The test was identical to the one given to the adults, except there was no human peer pressure group; it’s already very well established that kids are more susceptible to social influence. In this case, the researchers wanted to focus exclusively on the influence of robotic peers. Results showed that, unlike adults, the children were “significantly influenced” by the presence of robot peers, providing identical incorrect responses nearly 75 percent of the time.

A possible explanation is that the children were merely reacting to the novelty of the situation, that is, having to share a social space with robots. But “this criticism holds no ground,” the researchers write, because there was no accuracy decrease observed in the control group. Another possibility is that the children felt intimidated by the presence of the adult experimenter, who was present in the room during the task. Again, the researchers disagree, saying “this still suggests that the robots exerted peer pressure and does not invalidate the observations and conclusions,” adding that robots “are likely to be owned by someone, people, or organizations and might as such be proxies for indirect social peer pressure.”

This observation, that robots have the apparent power to induce conformity in children, is important to acknowledge. As the researchers write in the study:

> In this light, care must be taken when designing the applications and artificial intelligence of these physically embodied machines, particularly because little is known about the long-term impact that exposure to social robots can have on the development of children and vulnerable sections of society. More specifically, problems could originate not only from intentional programming of malicious behavior (e.g., robots that have been designed to deceive) but also from the unintentional presence of biases in artificial systems or the misinterpretation of autonomously gathered data by a learning system itself. For example, if robots recommend products, services, or preferences, will compliance and thus convergence be higher than with more traditional advertising methods?

Accordingly, the researchers recommend ongoing discussion of the matter, including protective measures such as product regulations to minimize risks to children during social child-machine interactions.

No doubt, as socially intelligent robots and AI become more powerful and more widespread, the potential for harm to children will grow with them. This study suggests that children may lack the intellectual faculties or emotional strength to resist the influence exerted by these technologies. It’s a sobering finding, one that calls for further contemplation and action.

[Science Robotics]