As soon as Dr. Blake Richards heard about deep learning, he realized he was facing more than a method that would revolutionize artificial intelligence. He realized he was looking at something fundamental about the human brain. It was the early 2000s, and Richards was taking a course at the University of Toronto with Geoff Hinton. Hinton, one of the originators of the algorithm that went on to conquer the world, was offering an introductory course on his learning method inspired by the human brain.

The key words here are “inspired by the brain.” Despite Richards' conviction, the odds were against him. The human brain, as it turned out, appears to lack an important function that is programmed into deep learning algorithms. On the surface, these algorithms violate basic biological facts already established by neuroscientists.

But what if deep learning and the brain are actually compatible?

Now, in a new study published in eLife, Richards, working with DeepMind, has proposed a new algorithm based on the biological structure of neurons in the neocortex. The neocortex, part of the cerebral cortex, is home to higher cognitive functions such as reasoning, prediction and flexible thinking.

The team connected the artificial neurons into a layered network and set it a classic computer vision task: identifying handwritten digits.

The new algorithm did well. More importantly, it learned from training examples the way deep learning algorithms do, yet it was built entirely on the fundamental biology of the brain.

“Deep learning is possible in a biological structure,” the scientists concluded.

Because the model currently exists only as a computer simulation, Richards hopes to pass the baton to experimental neuroscientists, who could test whether the algorithm operates in a real brain.

If so, the findings can be passed back to computer scientists to develop massively parallel and efficient algorithms that will run on our machines. This is a first step toward merging the two fields in a “virtuous circle” of discovery and innovation.

The search for a scapegoat

Although you have probably heard that artificial intelligence recently beat the best of the best at Go, you are unlikely to know exactly how the algorithms behind that artificial intelligence work.

In a nutshell, deep learning is based on an artificial neural network of virtual “neurons.” Like a tall skyscraper, the network is structured as a hierarchy: low-level neurons process the input, for example the horizontal or vertical strokes that form the digit 4, while high-level neurons process abstract aspects of the number 4.

To train the network, you give it examples of what you are looking for. The signal propagates through the network (up the floors of the building), and each neuron tries to pick out something fundamental about the “four.”
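The "floors of the building" can be pictured with a few lines of Python. This is only a minimal sketch of a forward pass, not the network from the study: the layer sizes, the sigmoid activation, the random weights and the toy 3x3 image are all assumptions made for illustration.

```python
import math
import random

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def layer_forward(weights, biases, inputs):
    """One floor: each neuron takes a weighted sum of its inputs, then squashes it."""
    return [sigmoid(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]

def forward(network, inputs):
    """Pass the signal up the hierarchy, keeping the activity of every layer."""
    activations = [inputs]
    for weights, biases in network:
        activations.append(layer_forward(weights, biases, activations[-1]))
    return activations

def random_layer(n_in, n_out, rng):
    """Hypothetical helper: a layer with random weights and zero biases."""
    weights = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out

rng = random.Random(0)
# Assumed sizes: 9 "pixels" -> 4 low-level neurons -> 2 high-level neurons.
network = [random_layer(9, 4, rng), random_layer(4, 2, rng)]
image = [0, 1, 0, 0, 1, 0, 0, 1, 0]  # toy 3x3 image containing a vertical stroke
activations = forward(network, image)
```

Here `activations[1]` holds the low-level responses and `activations[2]` the high-level ones. With untrained random weights, those responses mean nothing yet.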

Like children learning something new, the network does not fare very well at first. It outputs everything that, in its opinion, looks like the number four, and you get something in the spirit of Picasso.

But this is how learning happens: the algorithm compares the actual output with the ideal output and calculates the difference between them (read: the error). The error is then “backpropagated” through the network, telling each neuron, in effect: this is not what you are looking for, look harder.

After millions of examples and repetitions, the network starts to work perfectly.
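The loop of "compare, blame, adjust" can be sketched on a toy problem. The example below is a minimal illustration under stated assumptions, not the code of any real system: one hidden layer, one output neuron, a squared-error measure, and four made-up 3x3 images (vertical bars labelled 1, horizontal bars labelled 0).

```python
import math
import random

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def train_step(w1, w2, x, target, lr=0.5):
    """One backpropagation step: one hidden layer, one output neuron."""
    # Forward pass: the signal travels up the hierarchy.
    hidden = [sigmoid(sum(w * xi for w, xi in zip(row, x))) for row in w1]
    hidden.append(1.0)  # constant unit so the output neuron has a bias
    output = sigmoid(sum(w * h for w, h in zip(w2, hidden)))

    # Compare the actual output with the ideal output.
    error = output - target
    delta_out = error * output * (1.0 - output)

    # Backward pass: each hidden neuron's share of the blame is carried
    # back through the same weight (w2[j]) that was used on the way up.
    delta_hidden = [delta_out * w2[j] * hidden[j] * (1.0 - hidden[j])
                    for j in range(len(w1))]

    # Adjust every weight a little to reduce the error.
    for j in range(len(w2)):
        w2[j] -= lr * delta_out * hidden[j]
    for j, row in enumerate(w1):
        for i in range(len(row)):
            row[i] -= lr * delta_hidden[j] * x[i]
    return 0.5 * error ** 2

# Toy data (an assumption): vertical bars -> 1, horizontal bars -> 0.
examples = [
    ([0, 1, 0, 0, 1, 0, 0, 1, 0], 1.0),
    ([1, 0, 0, 1, 0, 0, 1, 0, 0], 1.0),
    ([1, 1, 1, 0, 0, 0, 0, 0, 0], 0.0),
    ([0, 0, 0, 1, 1, 1, 0, 0, 0], 0.0),
]

rng = random.Random(0)
n_hidden = 3
w1 = [[rng.uniform(-0.5, 0.5) for _ in range(9)] for _ in range(n_hidden)]
w2 = [rng.uniform(-0.5, 0.5) for _ in range(n_hidden + 1)]

# Repeat the examples many times, recording the total error per pass.
losses = []
for epoch in range(500):
    losses.append(sum(train_step(w1, w2, x, t) for x, t in examples))
```

Comparing `losses[0]` with `losses[-1]` shows how far the total error has fallen. Real networks do the same thing with far more neurons and examples.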

The error signal is extremely important for learning. Without effective backpropagation, the network would not know which of its neurons are wrong. By searching for a scapegoat, artificial intelligence improves itself.

The brain does this too. But how? We have no idea.

Biological dead end

One thing is clear: the deep learning solution does not work in the brain.

Backpropagation of error is an extremely important function. It requires a certain infrastructure to work properly.

First, each neuron in the network must receive the error signal. But in the brain, neurons are connected to only a few downstream partners (if any). For backpropagation to work in the brain, neurons at the first levels would have to receive information from billions of connections in downstream channels, and this is biologically impossible.

And while some deep learning algorithms adopt a local form of error backpropagation, essentially between neighboring neurons, it requires that the forward and backward connections be symmetric. In the brain's synapses this almost never happens.

More recent algorithms adopt a different strategy, implementing a separate feedback pathway that helps neurons find errors locally. Although this is more biologically feasible, the brain does not have a separate network dedicated to the search for scapegoats.
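The difference between the symmetric case and a separate feedback pathway comes down to one line of the backward pass. The sketch below assumes the "separate feedback path" resembles the approach known as feedback alignment, where the error travels back through fixed random weights instead of the forward ones. The source does not name the algorithm, so that choice, along with the toy data and all variable names, is an assumption.

```python
import math
import random

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def train_step(w1, w2, feedback, x, target, lr=0.5):
    """One learning step in which blame travels along separate feedback weights."""
    hidden = [sigmoid(sum(w * xi for w, xi in zip(row, x))) for row in w1]
    hidden.append(1.0)  # constant unit so the output neuron has a bias
    output = sigmoid(sum(w * h for w, h in zip(w2, hidden)))

    error = output - target
    delta_out = error * output * (1.0 - output)

    # Classical backpropagation would multiply by w2[j] here, which demands
    # symmetric forward and backward connections. This sketch instead uses
    # feedback[j], a fixed random weight that is never updated.
    delta_hidden = [delta_out * feedback[j] * hidden[j] * (1.0 - hidden[j])
                    for j in range(len(w1))]

    for j in range(len(w2)):
        w2[j] -= lr * delta_out * hidden[j]
    for j, row in enumerate(w1):
        for i in range(len(row)):
            row[i] -= lr * delta_hidden[j] * x[i]
    return 0.5 * error ** 2

# Toy data (an assumption): vertical bars -> 1, horizontal bars -> 0.
examples = [
    ([0, 1, 0, 0, 1, 0, 0, 1, 0], 1.0),
    ([1, 0, 0, 1, 0, 0, 1, 0, 0], 1.0),
    ([1, 1, 1, 0, 0, 0, 0, 0, 0], 0.0),
    ([0, 0, 0, 1, 1, 1, 0, 0, 0], 0.0),
]

rng = random.Random(0)
n_hidden = 3
w1 = [[rng.uniform(-0.5, 0.5) for _ in range(9)] for _ in range(n_hidden)]
w2 = [rng.uniform(-0.5, 0.5) for _ in range(n_hidden + 1)]
feedback = [rng.uniform(-0.5, 0.5) for _ in range(n_hidden)]
feedback_at_start = list(feedback)  # kept only to show the pathway stays fixed

losses = []
for epoch in range(1000):
    losses.append(sum(train_step(w1, w2, feedback, x, t) for x, t in examples))
```

On this small problem the forward weights tend to adapt to the fixed feedback weights, so the hidden neurons still receive a usable error signal. How well this carries over to larger networks is outside the scope of this sketch.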

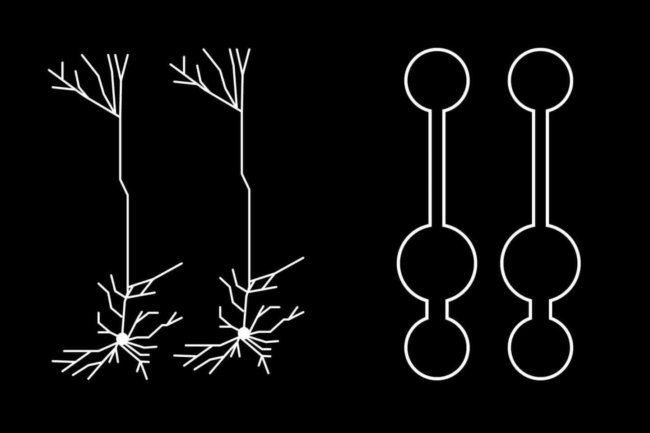

What it does have are neurons with complex structures, in contrast to the uniform “balls” currently used in deep learning.

Branching network

The scientists drew inspiration from the pyramidal cells that populate the cortex of the human brain.

“Most of these neurons are shaped like trees, with their ‘roots’ deep in the brain and their ‘branches’ close to the surface,” says Richards. “Interestingly, the roots receive one set of inputs and the branches another.”

Curiously, the structure of neurons often turns out to be “just right” for efficiently solving computational problems. Take sensory processing, for example: the bottoms of pyramidal neurons sit right where they need to be to receive sensory input, and the tops are well positioned to transmit errors through feedback.

Could this complex structure be an evolutionary solution for handling the error signal?

The scientists built a multilayer neural network based on previous algorithms. But instead of uniform neurons, they gave the neurons in the middle layers, sandwiched between the input and the output, a structure resembling the real thing. Trained on handwritten digits, the algorithm performed much better than a single-layer network, despite the absence of classical error backpropagation. The cell-like structure itself was able to identify the error. Then, at the right moment, the neuron combined both sources of information to find the best solution.

This has a biological basis: neuroscientists have long known that a neuron's input branches carry out local computations that can be integrated with signals propagating back from the output branches. But we do not know whether the brain really works this way, so Richards is asking neuroscientists to find out.
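The idea of a neuron that keeps its "root" inputs and its "branch" inputs apart can be illustrated with a toy class. This is a loose sketch and not the model published in eLife: the class name, the comparison between feedback with and without a teaching signal, the fixed feedback weights and all numbers are illustrative assumptions.

```python
import math
import random

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

class TwoCompartmentNeuron:
    """Hypothetical toy neuron with separate 'root' and 'branch' inputs."""

    def __init__(self, n_inputs, n_feedback, rng):
        # Roots: weights on the sensory (feedforward) input; these are learned.
        self.basal_w = [rng.uniform(-0.5, 0.5) for _ in range(n_inputs)]
        # Branches: weights on feedback from higher layers; kept fixed here.
        self.apical_w = [rng.uniform(-0.5, 0.5) for _ in range(n_feedback)]

    def fire(self, inputs):
        """Output of the neuron, driven by the root compartment only."""
        return sigmoid(sum(w * x for w, x in zip(self.basal_w, inputs)))

    def apical_signal(self, feedback):
        """Feedback is summed separately, in the branch compartment."""
        return sigmoid(sum(w * f for w, f in zip(self.apical_w, feedback)))

    def learn(self, inputs, feedback_free, feedback_taught, lr=0.1):
        """Local update: compare branch activity with and without a teacher."""
        local_error = (self.apical_signal(feedback_taught)
                       - self.apical_signal(feedback_free))
        rate = self.fire(inputs)
        slope = rate * (1.0 - rate)
        for i, x in enumerate(inputs):
            self.basal_w[i] += lr * local_error * slope * x
        return local_error

rng = random.Random(0)
neuron = TwoCompartmentNeuron(n_inputs=3, n_feedback=1, rng=rng)
inputs = [1.0, 0.0, 1.0]

before = neuron.fire(inputs)
# Assumed values: the higher layer's own answer (0.3) versus its answer
# when nudged toward the correct label (0.9).
err = neuron.learn(inputs, feedback_free=[0.3], feedback_taught=[0.9])
after = neuron.fire(inputs)
```

The neuron never sees a backpropagated gradient. It changes its root weights using only the difference it observes in its own branches, so `after` moves away from `before` in the direction given by the sign of `err`.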

Moreover, the network handles the problem in a way similar to the traditional deep learning method: it uses a multilayer structure to extract progressively more abstract ideas about each number.

“It is a feature of deep learning,” explain the authors.

Deep learning brain

Without a doubt, this story will have more twists and turns as computer scientists bring more and more biological detail into AI algorithms. Richards and his team are now looking at top-down predictive function, in which signals from higher levels directly affect how lower levels respond to input.

Feedback from upper levels not only improves the signaling of errors; it can also encourage lower-level processing neurons to work “better” in real time, says Richards. So far the network has not outperformed other, non-biological deep learning networks. But that does not matter.

“Deep learning has had a huge impact on AI, but to date its impact on neuroscience has been limited,” say the authors of the study. Now neuroscientists have a reason to run experimental tests and find out whether the structure of neurons underlies a natural deep learning algorithm. Perhaps within the next ten years a mutually beneficial exchange of data will begin between neuroscientists and artificial intelligence researchers.

Does our brain use deep learning to understand the world?

Ilya Hel