Perhaps one of the most challenging tests of machine intelligence was the chess match that took place almost 20 years ago between the computer Deep Blue and world chess champion Garry Kasparov. The machine won. A series of games of Go has just ended in which AlphaGo, an AI from DeepMind (owned by Google), competed against the legendary Go champion Lee Sedol of South Korea. The machine won four of the five games, demonstrating its superiority over humans in this game. This incredibly complex contest between human and AI shows how greatly machine intelligence has developed. It seems that the fateful day when machines really become smarter than humans is now closer than ever. However, many people do not understand, and are perhaps even mistaken about, the consequences that we can expect in the future.

We really are clinging to some very serious and even dangerous misconceptions about the development of artificial intelligence. Last year, SpaceX co-founder Elon Musk made a statement expressing concern about a possible takeover of the world by artificial intelligence, which in turn provoked a huge number of comments from both supporters and opponents of this view.

For such a fundamentally revolutionary prospect, it is surprising how much controversy there is today about whether it will happen at all and what form it will ultimately take. Especially strange is the denial of the incredible benefits that we could gain from creating a truly smart AI, taking into account, of course, all the possible risks. It is incredibly difficult to find the right answers to all of these questions, because AI, unlike any other human invention, could really either reinvigorate humanity or destroy it completely.

It is difficult to understand what to believe and what to hope for. However, thanks to pioneers of computer science, neuroscientists and AI theorists, a clear picture is slowly beginning to emerge. Below is a list of the most common misconceptions and myths about artificial intelligence.

We will never create AI with human intelligence

Reality, in turn, suggests that we already have computers that match and even surpass human abilities in some areas. Take chess or Go, trading on stock exchanges, or the role of a virtual conversation partner. Computers and the algorithms that run them will only get better with time, and it’s only a matter of time until they match human capabilities.

Gary Marcus, a researcher at New York University, once said that “almost everyone” who works in the field of AI believes that machines will surpass us one day:

“The only debate between supporters and skeptics is the time frame of this event”.

Futurists such as Ray Kurzweil believe that this event may occur in the coming decades, while others say that it will take a few centuries.

AI skeptics are unconvincing when they argue that creating an artificial intelligence, something unique and very similar to a real living human brain, is technologically beyond reach. Our brain is also a machine: a biological machine. It exists in the same world and is subject to the same laws of physics as everything else. And with time we will fully unravel how it works.

The AI will have consciousness

There is a common view that machine intelligence will be conscious, that is, that AI will think the way humans do. In addition, some critics, such as Microsoft co-founder Paul Allen, believe that because we lack a complete theoretical framework describing the mechanisms and principles of consciousness, we have not yet created even artificial general intelligence, i.e. intelligence that can perform any intellectual task a human can. However, according to Murray Shanahan, Professor of Cognitive Robotics at Imperial College London, we should not equate these two concepts.

“Consciousness is definitely a very interesting and important subject of research, but I do not think that consciousness has to be an obligatory attribute of human-level artificial intelligence,” says Shanahan.

“By and large, we use the term ‘consciousness’ only to indicate several psychological and cognitive attributes that are usually bundled together in humans.”

It is realistic to imagine a very clever machine that lacks one or more of these attributes. Someday we may build a truly, incredibly smart AI that nonetheless lacks self-awareness and a subjective, conscious understanding of the world. Shanahan notes that combining intelligence and consciousness inside a machine will still be possible, but we must not lose sight of the fact that these are two completely separate concepts.

And although a machine has successfully passed one version of the Turing Test, in which it showed that it was indistinguishable from a person, this does not mean that the machine has consciousness. From our (human) point of view, an advanced artificial intelligence might seem conscious, but the machine itself will be no more aware of itself than a stone or a calculator.

We should not fear AI

In January of this year, Facebook founder Mark Zuckerberg shared his view that we shouldn’t fear AI, adding that this technology can bring huge benefits around the world. The truth is that he is only partly right. We really can gain amazing benefits from AI (ranging from driverless cars to new discoveries in medicine), but no one can guarantee that every application of AI will be beneficial.

A highly intelligent system might know everything required for certain tasks (for example, resolving a complex global financial situation or hacking an enemy’s computer systems), but outside of those highly specialized tasks, the potential of AI is still entirely unclear, and therefore potentially dangerous. For example, DeepMind’s system specializes in the game of Go, but it has no ability (or reason) to explore areas outside of that domain.

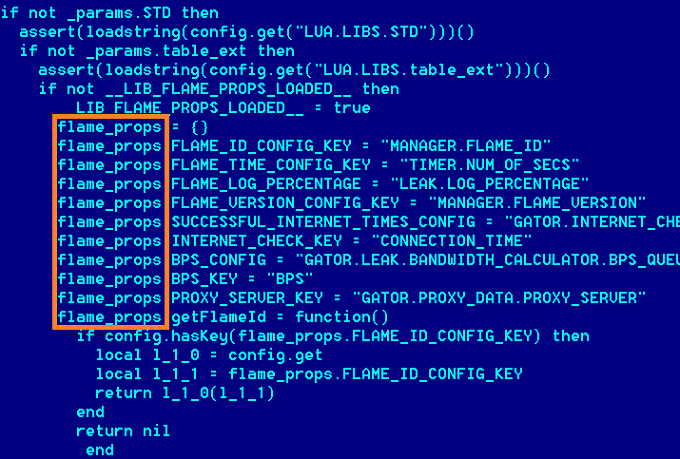

The Flame computer virus, whose task was to monitor Middle Eastern countries

Many of these systems can pose a serious security threat. A good example is the powerful and very cunning Stuxnet virus, a militarized “worm” created by American and Israeli forces to infiltrate and attack Iran’s nuclear plants. However, this malware somehow (accidentally or deliberately) also struck one of Russia’s nuclear plants.

Another example is the Flame virus, designed for targeted cyber espionage in the Middle East. It is easy to imagine how “future versions” of Stuxnet and Flame could go beyond their assigned tasks and infect almost the entire infrastructure of a country. And do it very quietly.

Artificial super-intelligence will be too smart to make mistakes

Richard Loosemore, a mathematician, artificial intelligence researcher and founder of the robotics company Surfing Samurai Robots, believes that most doomsday scenarios involving AI seem unlikely, because in general they all rest on the assumption that AI will one day say: “I understand that destroying people is a mistake in my code, but I’m still forced to follow the task.”

Loosemore believes that if an AI behaved according to this scenario, it would run into logical contradictions that would undermine its entire accumulated knowledge base and eventually lead it to recognize its own folly and futility.

The researcher also believes that people who say “AI will only do what it is programmed to do” are mistaken, just like those who once said the same thing about computers, claiming that computer systems would never be versatile.

Peter McIntyre and Stuart Armstrong, both of whom work at the Future of Humanity Institute at Oxford University, disagree with this view, arguing that AI will be largely bound by its program code. They do not believe that AI will never make mistakes or, conversely, that it will be too stupid to understand what we want from it.

“By definition, an artificial super-intelligence (ASI) is an agent whose intelligence will far surpass the best human minds in almost every field,” says McIntyre.

“It will definitely understand what we want it to do.”

McIntyre and Armstrong believe that AI will perform only the tasks for which it was programmed; however, if it somehow evolves, it will likely try to figure out how its actions differ from human intentions and the underlying laws.

McIntyre compares the future position of humans to that of mice. Mice have a strong instinct to search for food and shelter, but their goals very often conflict with those of a person who doesn’t want to see them in the home.

“Just as we know about mice and their desires, a super-intelligent system could know all about us and what we want, yet be completely indifferent to our wishes.”

A simple solution will eliminate the AI control problem

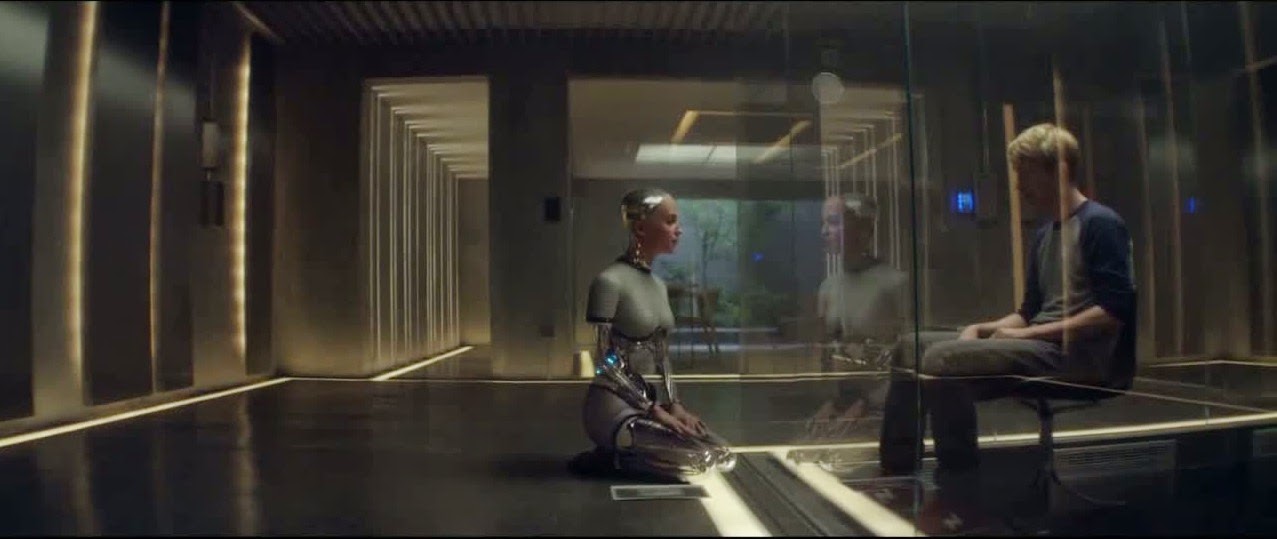

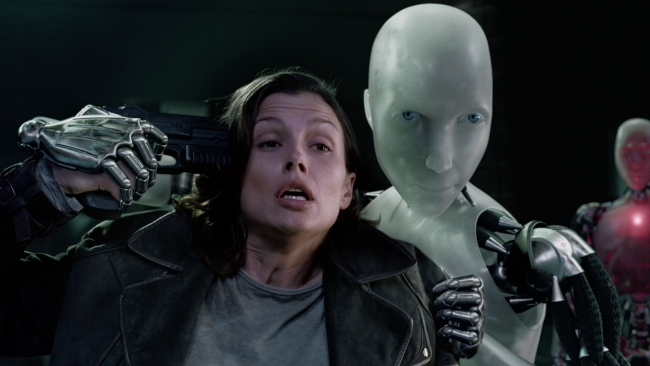

As shown in the movie “Ex Machina”, it will be very difficult to control an AI that is smarter than us

Assuming that one day we create a super-intelligence that is smarter than people, we will be faced with a serious problem: the control problem. Futurists and AI theorists are still unable to explain how we could manage and contain a super-intelligence after its creation. It is also unclear how we could ensure that it will be friendly towards people. Recently, researchers at the Georgia Institute of Technology (USA) naively suggested that AI could learn and absorb human values and social norms simply by reading simple stories. Yes, the simple children’s fairy tales and stories that our parents read to us as children. But in reality it will be much more difficult than this.

“There have been many so-called ‘solutions’ to the problem of controlling artificial intelligence,” says Stuart Armstrong.

One example of such a solution would be to program the super-intelligence so that it constantly tries to impress or please humans. An alternative would be to build concepts such as love or respect into its source code. And to avoid a scenario in which the AI oversimplifies these concepts and perceives the world through their prism, dividing it only into black and white, it could be programmed to understand and accept intellectual, cultural and social diversity.

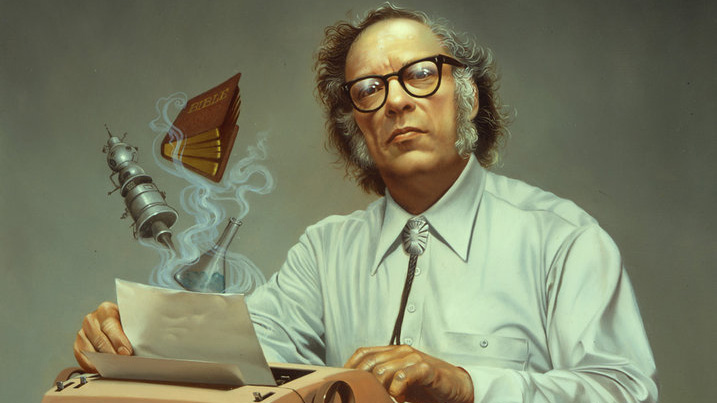

The Three Laws of Robotics created by Isaac Asimov fit perfectly into fiction, but in reality we need something more comprehensive to address the control problem

Unfortunately, these solutions are too simple. They look like an attempt to fit the complexity of human likes and dislikes under one umbrella definition or concept, or to squeeze the complexity of human values into a single word, phrase or idea. Try, for example, to come up with a consistent and adequate definition of a concept such as “respect” within this framework.

“Of course, we should not think that such simple options are completely useless. Many of them offer an excellent occasion for reflection, and may stimulate the search for a solution to the ultimate problem,” says Armstrong.

“But we can’t rely solely on them without more comprehensive work, without clear research and an exploration of the consequences of using one solution or another.”

We will be destroyed by artificial super-intelligence

No one can guarantee that AI will destroy us one day, and no one can say with certainty that we will not find ways to control AI and use it for our own purposes. As the American artificial intelligence expert Eliezer Yudkowsky once said: “AI neither loves nor hates you, but you are made of atoms, which it could use for something else.”

Oxford philosopher Nick Bostrom, in his book “Superintelligence: Paths, Dangers, Strategies”, writes that a true super-intelligence may one day emerge that would be more dangerous than any invention humans have ever produced. Prominent contemporary thinkers such as Elon Musk, Bill Gates and Stephen Hawking (the last of whom has warned that “creating AI could be the most terrible mistake in human history”) largely agree with this view and are already sounding the alarm.

Peter McIntyre believes that for most of the tasks a super-intelligence could set itself, people will look like an unnecessary link.

“AI will one day be able to come to the conclusion (and, it should be noted, it will be quite correct) that people don’t want it to maximize the profitability of a particular company at any price, regardless of the consequences for consumers, the environment and living beings. So it will have a huge incentive to develop a plan and a strategy to ensure that no one can interfere with its task by changing or even disabling the AI.”

According to McIntyre, if an AI’s goals are directly opposed to our own, that will give it good reason not to let us stop it. And considering that its level of intelligence would be far above our own, stopping it would be very difficult, if not impossible.

However, nothing can be said with certainty right now, and no one can say what form of AI we will have to deal with or how it might threaten humanity. As Elon Musk once said, AI can actually be used to manage, monitor and control other AI. And perhaps it can even be imbued with human values and an initial friendliness towards people.

Artificial super-intelligence will be friendly

The philosopher Immanuel Kant believed that intelligence is strongly correlated with morality. In his paper “The Singularity: A Philosophical Analysis”, neuroscientist David Chalmers took Kant’s famous idea as a basis and tried to apply it to the emergence of artificial super-intelligence.

“If the principles described in this work are correct, then along with the rapid development of AI we can expect a dramatic development of moral principles. Further development will lead to super-intelligent systems that have super-morality as well as super-intelligence. Therefore, we should expect only benign qualities from them.”

The truth is that the idea of an advanced AI endowed with moral principles and exceptional virtue is not tenable. As Armstrong points out, the world is full of smart war criminals. Intelligence and morality in humans, for example, are not connected, so the scientist doubts that such a relationship will exist in other forms of intelligence.

“Smart people who behave immorally usually cause far more problems and pain than their less intelligent counterparts. Intelligence lets them be more sophisticated in their bad behavior; it does not turn them toward good,” says Armstrong.

McIntyre explains that an agent’s ability to achieve its goal has nothing to do with what exactly that goal is.

“We will be very lucky if our AI turns out to be more moral and not just smarter. Relying on luck is, of course, a last resort in this matter, but perhaps luck is what will determine our position in the future,” says the researcher.

The risks associated with AI and robotics are of the same nature

This is an especially common misconception, spread by the media and by Hollywood blockbusters like “Terminator”.

If an artificial super-intelligence such as Skynet really wanted to destroy all of humanity, it is unlikely that it would use paramilitary androids with machine guns in each hand. Its cunning and efficient thinking would allow it to understand that it is far more convenient to use, say, a new kind of biological plague, or perhaps some kind of nanotechnological catastrophe. Or maybe it would just destroy our planet’s atmosphere. AI is potentially dangerous not just because its development is closely linked with the development of robotics. The reason for its potential danger lies in the methods and means by which it will be able to make its presence known to the world.

The AI shown in science fiction is a reflection of our future

Without a doubt, writers have for many years used science fiction as a springboard for speculation about our real future, but the actual creation of a super-intelligence and its real consequences are still beyond the horizon of our knowledge. Moreover, the artificial and distinctly inhuman nature of AI means that we cannot predict with any degree of precision what such an AI will actually look like.

In most science fiction, AI closely resembles humans.

“We actually face a whole spectrum of possible types of mind. Even within the human species alone, your mind is not identical to the mind of your neighbor. But that comparison is just a drop in the ocean of all the possible diversity of minds that could exist,” says McIntyre.

Most sci-fi works have been created, of course, primarily to tell a story, not to be as scientifically convincing as possible. If it were the other way around (science being more important than the plot), such works would hardly be interesting to watch.

“Just imagine how boring all those stories would be in which an AI, lacking consciousness and the ability to enjoy, love or hate, destroys all of humanity almost without resistance in order to achieve its goal, a goal which, incidentally, might not be very interesting to the reader or viewer either,” comments Armstrong.

The AI will take our jobs

AI’s ability to automate processes that we do manually and its potential ability to destroy all of mankind are not one and the same. However, according to Martin Ford, author of the book “Rise of the Robots: Technology and the Threat of a Jobless Future”, the two are very often conflated. Of course, it’s great that we are trying to anticipate the distant implications of AI, but only if these efforts do not distract us from the problems we may face in a couple of decades if we do nothing. And one of the main problems is mass automation.

Few would dispute that one of the goals of artificial intelligence will be to find ways to automate many jobs, ranging from factory work to some white-collar positions. Some experts predict that half of all jobs, at least in the U.S., will be hit by automation in the near future.

But this does not mean that we will be unable to cope with these changes. It is important to understand that the desire to free ourselves from unnecessary physical and psychological stress at work has long been a dream of our species.

“In a few decades, AI really will replace people in many jobs. And that’s actually very good. For example, driverless cars will replace truckers, which in turn will reduce not only the cost of delivering goods but also the cost of the goods themselves. If you’re a trucker, you will certainly lose out, but everyone else will, in effect, get a raise. And the more money people save, the more they can spend on other goods and services that people are employed to provide.”

It is likely that artificial intelligence will find new ways to create prosperity and provide for people while they do other things. Progress in AI will be accompanied by advances in other areas, particularly in the productive sectors. In the future, this is far more likely to simplify than to complicate the satisfaction of our basic needs.