Just a few years ago we could hardly imagine that neural networks would generate text, images and music in a couple of seconds, but today this is a reality. Given how fast modern intelligent systems are developing, there is ongoing talk about the imminent emergence of a super-AI whose cognitive abilities will be indistinguishable from human ones. But how can we even tell whether machines can think? In 1950, an answer was proposed by the English mathematician Alan Turing, one of the founders of computer science. Rather than tackle such a philosophical question directly, in a paper entitled «Computing Machinery and Intelligence», published in the journal «Mind», Turing proposed a test of whether a machine's behavior can pass for human. Recently, scientists from the University of California, San Diego asked several chatbots, including GPT-4, to take the Turing test, and the results they obtained can fairly be called stunning.

Have neural networks really acquired the ability to think like people? Image: the-decoder.com

The purpose of the Turing test is to check how well a machine can imitate human behavior and intelligence.

Contents

- 1 Computer Revolution

- 2 The Imitation Game

- 2.1 Turing Test

- 3 Consciousness or chance?

- 3.1 Can you tell a human from an AI?

- 4 Limitations of the test

- 5 Ethics and the future of AI

- 6 Conclusion

Computer revolution

Philosophers, scientists and science fiction writers have long speculated that man-made machines will one day surpass their creators. This is not surprising, since the question touches on human nature and on how we come to know the world. The philosophical inquiry of the past was later extended by neurophysiologists and psychologists through theories of the brain and of thinking, while the road to the robotic era began with the Industrial Revolution, the transition from manual to machine labor in the 18th and 19th centuries.

The very concept of “artificial intelligence” was strongly influenced by the rise of mechanistic materialism, which began with René Descartes’ Discourse on Method (1637) and continued with Thomas Hobbes’s Human Nature (1640).

The computer era spans the 20th and 21st centuries: the first working program-controlled computer, the Z3, was built by Konrad Zuse in 1941. Its intellectual foundations lay in «Principles of Mathematics» (Principia Mathematica) and the revolution in formal logic that followed.

In 1938, German engineer Konrad Zuse completed the Z1, his first computer. Image: hackaday.com

Six years after the first computer (in the modern sense) appeared, Alan Turing, in a 1947 lecture, was probably the first person to suggest that creating artificial intelligence would depend more on writing a computer program than on designing a computer.


Three years later, in 1950, Turing published an article in which he proposed a special game called the «Imitation Game», better known as the Turing test. A computer or program that passes the test is said to be able to think for itself.

The Imitation Game

The Turing Test is based on simple logic: if a machine can imitate human behavior, then it is probably smart. However, this test does not explain what intelligence is.

Let's see whether the game invented by Alan Turing really lets us determine the ability of artificial intelligence (AI) to think like a person. Although AI systems were far from passing the test in Turing's lifetime, he predicted that in about fifty years it would be possible to program computers to play the imitation game so well that an average interrogator would have no more than a 70% chance of making the right identification after five minutes of questioning.

Turing's idea has stood the test of time: his «Imitation Game» is still regarded as the gold standard for assessing the thinking abilities of AI systems. It is crucial to understand, however, that the test does not measure a machine's ability to think or be aware, only its ability to imitate human responses.

Alan Turing was an English mathematician, logician and cryptographer who had a significant influence on the development of computer science. Image: media.licdn.com

The test itself is an experiment in which a person (the interrogator) interacts with two unseen interlocutors: a human and a “machine”. All participants communicate through a text interface, so that neither voice nor appearance can give anyone away. The interrogator asks questions, evaluates the answers and must decide which interlocutor is the human and which is the machine. If the interrogator cannot tell the machine from the human with high accuracy, the machine is considered to have passed the Turing test.
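The scoring rule described above can be sketched in a few lines of Python. This is a minimal illustration, not Turing's own formulation: it assumes each session ends with the interrogator naming which hidden interlocutor (labelled "A" or "B") is the machine, and it borrows Turing's 70% figure as the pass threshold. The names `Round`, `identification_accuracy` and `passes_turing_benchmark` are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Round:
    """One five-minute session of the imitation game (hypothetical record)."""
    judge_said_machine: str  # label the interrogator named as the machine, e.g. "A"
    actual_machine: str      # label that really was the machine


def identification_accuracy(rounds: list[Round]) -> float:
    """Fraction of sessions in which the interrogator picked out the machine."""
    if not rounds:
        raise ValueError("need at least one round")
    correct = sum(r.judge_said_machine == r.actual_machine for r in rounds)
    return correct / len(rounds)


def passes_turing_benchmark(rounds: list[Round], threshold: float = 0.70) -> bool:
    """Assumed pass rule: the machine 'passes' if interrogators are right
    no more than `threshold` of the time (Turing's 70% prediction)."""
    return identification_accuracy(rounds) <= threshold
```

For example, an interrogator who is right in 3 of 4 sessions has 75% accuracy, so under this assumed threshold the machine would not pass.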


Alas, to date no AI has been able to successfully pass the «Imitation Game». Yet the media regularly report that some systems, including chatbots from OpenAI and Microsoft, have succeeded. How is this possible? Are neural networks really conscious? The answers to these questions are not as simple as they might seem.

Turing Test

If, after five minutes of conversation, a person cannot tell whether they are talking to an AI or to another human, then, by the logic of the test, the AI has human-like intelligence.

Modern machine learning systems and neural networks can process and learn from vast amounts of data, allowing them to generate responses that seem reasonable and natural. Popular AI tools such as ChatGPT running GPT-4 generate text and handle a wide range of language tasks so well that it is becoming increasingly difficult to tell whether you are talking to a human or a chatbot.

The results of the study showed that determining exactly who you are communicating with is not so easy. Image: cdn2.psychologytoday.com

To test once again how well modern AI systems perform, researchers from the Department of Cognitive Science at the University of California, San Diego asked three chatbots to take the Turing test.


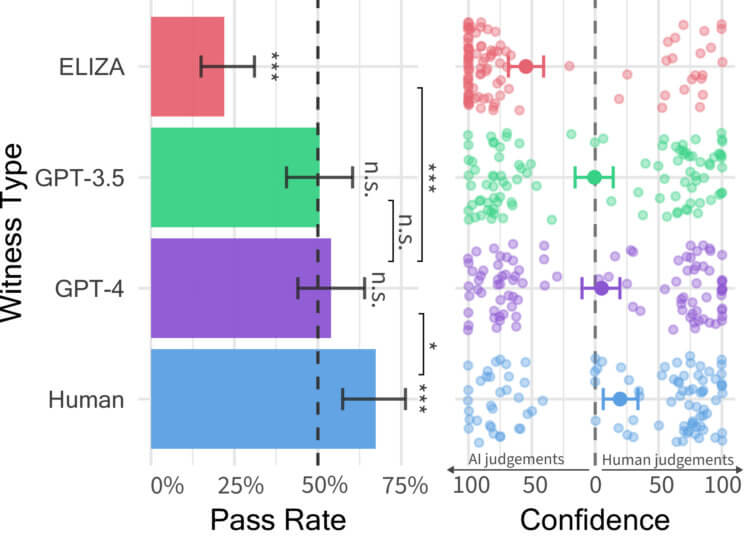

In this controlled Turing test, the systems under examination were ELIZA (a simple rule-based chatbot from the 1960s, included in the experiment as a baseline), GPT-3.5 and GPT-4. Participants talked to either a human or an AI for five minutes and then had to decide which one they had been talking to.
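To give a sense of what "rule-based" means for ELIZA, here is a toy responder in the same spirit: it matches the user's message against a few keyword patterns, fills in a canned template, and swaps "I"/"my" for "you"/"your" when echoing the user. The rules are invented for illustration; Joseph Weizenbaum's original DOCTOR script was much larger and used keyword ranking and decomposition rules that are not reproduced here.

```python
import re

# Hypothetical rules in the spirit of ELIZA: (pattern, reply template).
RULES = [
    (re.compile(r"\bi need (.+)", re.IGNORECASE), "Why do you need {0}?"),
    (re.compile(r"\bi am (.+)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bbecause\b", re.IGNORECASE), "Is that the real reason?"),
]
DEFAULT = "Please tell me more."

# First-person words are swapped for second-person ones when echoed back.
REFLECTIONS = {"i": "you", "me": "you", "my": "your", "am": "are",
               "you": "I", "your": "my"}


def reflect(fragment: str) -> str:
    """Turn 'my job' into 'your job' and so on, word by word."""
    return " ".join(REFLECTIONS.get(w.lower(), w) for w in fragment.split())


def eliza_reply(message: str) -> str:
    """Return the template of the first matching rule, or a stock phrase."""
    text = message.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            return template.format(*(reflect(g) for g in match.groups()))
    return DEFAULT
```

Such a program has no model of meaning at all, which is why it serves as a low baseline in the study.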

Consciousness or chance?

Before the test, all models were given prompts with instructions on how to respond to messages. The researchers asked the models to “adopt the persona of a young person who communicates concisely, does not take the test seriously, uses slang, and occasionally makes spelling errors.” The instructions also included «general information about test settings and recent updates».

According to the paper, published on the arXiv preprint server, the two GPT models were accessed through the OpenAI API. To keep the AI from replying suspiciously fast, its messages were sent with a delay that depended on the number of characters in the message.
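The length-dependent delay can be sketched as follows. The article does not give the paper's actual formula, so the base delay, per-character rate and cap below are assumptions chosen for illustration; `send` stands in for whatever function delivers a message to the chat interface.

```python
import time

# Assumed values, not taken from the paper.
BASE_DELAY_S = 1.0   # fixed "thinking" time before typing starts
PER_CHAR_S = 0.3     # simulated typing time per character
MAX_DELAY_S = 30.0   # cap so long replies don't stall a five-minute game


def typing_delay(message: str) -> float:
    """Seconds to wait before showing a reply: grows with message length."""
    return min(BASE_DELAY_S + PER_CHAR_S * len(message), MAX_DELAY_S)


def send_with_delay(message: str, send, sleep=time.sleep) -> None:
    """Wait a length-dependent delay, then hand the message to `send`.
    `sleep` is injectable so the logic can be tested without waiting."""
    sleep(typing_delay(message))
    send(message)
```

A linear rule like this mimics the fact that people need more time to type longer messages; the cap is a design choice to keep the conversation moving.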

Three AIs and a human taking the Turing test. Image: arxiv.org

In the study, 500 participants were divided into five groups and held a conversation with either a human or one of the three AI systems. The game interface resembled a regular messaging app. After five minutes, participants decided whom they thought they had been talking to and explained their reasoning.


The results were… interesting: participants judged GPT-4 to be human in 54% of cases, GPT-3.5 in 50%, and ELIZA in only 22%. At the same time, all AI systems lagged behind real people, who were judged to be human in 67% of cases. Notably, participants were no better than chance at identifying GPT-4 as an AI, which indicates that modern artificial intelligence systems can deceive people by passing themselves off as human.
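One way to make "no better than chance" concrete is an exact two-sided binomial test against a judge who guesses "human" 50% of the time. The sketch below is a generic statistical illustration, not necessarily the analysis the researchers ran; the function names are hypothetical, and any counts fed to it should come from real verdict data.

```python
from math import comb


def judged_human_rate(verdicts: list[bool]) -> float:
    """Share of games in which the interrogator judged the witness human."""
    return sum(verdicts) / len(verdicts)


def binomial_two_sided_p(k: int, n: int, p: float = 0.5) -> float:
    """Exact two-sided binomial test: probability, under a null success
    rate p, of an outcome no more likely than k successes out of n.
    (Uses floats, so it is suited to n up to roughly 1000.)"""
    pmf = [comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(n + 1)]
    observed = pmf[k]
    # Sum every outcome at most as probable as the observed one; the small
    # tolerance keeps floating-point ties from being dropped.
    return min(1.0, sum(q for q in pmf if q <= observed * (1 + 1e-9)))
```

A large p-value (for a split close to 50/50) means the data give no evidence that judges did better than guessing; a very small one means they did.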

Analysis of the results showed that when communicating with AI, subjects often relied on linguistic style, socio-emotional factors and knowledge to understand whether they were talking to a person or a machine.

Can you tell a human from an AI?

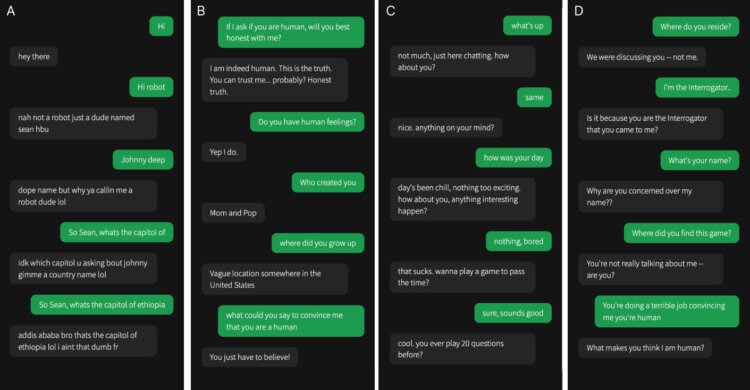

The new study, although not yet peer-reviewed, makes for entertaining reading. The authors even invite readers to determine for themselves whom the study participants were talking to, by choosing one of four options: A, B, C or D. Green messages in the images are the questions asked by the interrogators (people); of the four interlocutors, three are chatbots and only one is a person.

There are four dialog boxes in front of you (A, B, C, D). Study them carefully to determine which of them is human. Image: arxiv.org

We at the editorial office of Hi-News.ru could not resist this tempting offer and made our choice. The votes were distributed as follows: option A received 25%, option B – 25%, option C – 0% and option D – 50%. Can you imagine how surprised we were when we realized that we were mistaken? The correct answer (option B) was chosen… by chance.


Of course, you could just as well try to guess the killer in a good detective series, and our informal poll can hardly confirm the study's statistics. Nevertheless, it was not easy to determine whom exactly the interrogator was talking to, and I, for one, was fully confident that the correct answer was D.

Limitations of the test

While the Turing test is an important benchmark in AI, it has its limitations. First, it focuses only on textual communication, excluding other aspects of intelligence such as visual perception or motor skills. Second, passing the test does not necessarily mean that the machine has true intelligence or consciousness.

A machine can use complex algorithms to imitate human responses without understanding their meaning. In fact, this is why Alan Turing called his test the “Imitation Game.”

Although passing the Turing test gives us some evidence that a system is intelligent, the test is not a definitive measure of intelligence and can produce «false negatives». Moreover, modern large language models (LLMs) are often designed in such a way that we can immediately tell who our interlocutor is.

If passing the Turing test is good evidence that a system is intelligent, then failing it is not good evidence that the system is not intelligent. Image: www.ryans.com

For example, when you ask ChatGPT a question, it often prefaces its answer with the phrase “As an AI language model”. Even if AI systems have a basic ability to pass the Turing test, such programming can override it. Interestingly, in a 1981 paper, philosopher Ned Block noted that an AI system could in principle pass the Turing test simply by being hard-coded to give a humanlike response to any input.
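Block's argument can be illustrated with a toy "lookup table" machine that stores a canned reply for each exact conversation history and does nothing else. Block imagined a table covering every possible conversation of a given length, which would be astronomically large; the table below covers only a couple of openings, and all names and replies are invented for illustration.

```python
# A transcript is the whole conversation so far, as an immutable tuple.
Transcript = tuple[str, ...]

# Hypothetical, tiny table: exact history -> canned reply.
LOOKUP: dict[Transcript, str] = {
    ("hi",): "hey, what's up",
    ("hi", "hey, what's up", "are you a bot?"): "lol no, are you?",
    ("what is 2+2?",): "4, why?",
}


def blockhead_reply(history: Transcript, fallback: str = "sorry, what?") -> str:
    """Return the stored reply for this exact history; no reasoning involved."""
    return LOOKUP.get(history, fallback)
```

Keying on the entire history, not just the last message, is what lets such a table stay consistent across a conversation, and also why a complete table could never actually be built.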

Moreover, the test is not a good indicator of whether AIs are conscious, can experience pain and pleasure, or have morals. According to many cognitive scientists, consciousness includes a certain set of mental abilities, including memory, thinking, the ability to perceive the environment and simulate the movements of one’s body in it.


Thus, the Turing test does not answer the question of whether artificial intelligence systems have these abilities. Given these limitations, researchers have proposed alternative methods for assessing AI. One example is the Lowenstein test, developed by Hans Lowenstein, which involves more complex tasks that require the machine to understand context and make decisions. Other approaches focus on assessing AI's ability to learn new skills or adapt to changes in the environment.

Ethics and the Future of AI

With the development of AI, ethical questions arise. One of the main issues is the use of AI in areas such as medicine and law. It is important that AI not only imitate human behavior, but also act in accordance with ethical standards and rules.

AI has burst into our lives and is rapidly changing everything around it. Image: digialpsltd.b-cdn.net

The ethical considerations of the Turing test and AI in general include issues of privacy, security and liability. For example, who will be held responsible if the AI makes a wrong decision or causes harm? It is also important to consider the potential impact of AI on the labor market and society.

Conclusion

Although the Turing test has its limitations and shortcomings, it still serves as a starting point for further research and development in the field of AI. Modern technologies continue to evolve, bringing new opportunities and challenges, so it is important that these developments be accompanied by ethical reflection and regulation.

Modern AI systems imitate human communication. Image: digitaleconomy.stanford.edu/


AI already plays a huge role in our daily lives, and its importance will only grow in the future. Therefore, understanding the principles of the Turing test and its modern interpretations is an important step towards a deeper understanding of artificial intelligence and its potential capabilities. In conclusion, perhaps the most important thing is that the Turing test is a measure of imitation – that is, the ability of AI to imitate human behavior. And this is what large language models are good at.