Since Google updated its search engine and added the experimental “AI Overview” tool, powered by the Gemini artificial intelligence model, many funny stories about it have appeared on social networks. The idea behind the tool is that Google not only shows a list of sites matching a search query but also presents a complete answer to that query, generated by the neural network. However, the neural network sometimes gives completely ridiculous answers. At first this made users laugh, but now the jokes seem to be over: the neural network gave a Reddit user advice that nearly cost his entire family their lives.

The neural network seems to have tried to kill the user. Photo source: dzen.ru

Why you can’t trust artificial intelligence

From the start, artificial intelligence has not been known for the honesty and accuracy of its answers. OpenAI's well-known ChatGPT, for example, quite often gives false answers. The Washington Post reported on a sex scandal fabricated by the neural network: a lawyer in California asked the ChatGPT chatbot to compile a list of legal scholars who had sexually harassed someone. The AI responded with a fictitious story that named a very real professor, Jonathan Turley, as the accused.

Google’s neural network, it seems, is not much better. Admittedly, the full rollout of the new “AI Overview” tool is not expected until the end of this year. But the feature is already available to some users in the US, and Google has promised high-quality results.

The Gemini neural network turned out to be far less reliable than its developers promised. Photo source: cyberwolf.blog

The company said in a statement that most AI overviews provide high-quality information, with links to sources for digging deeper. The developers also stated that the AI had been thoroughly tested before launch and meets Google's high quality standards. In practice, however, as often happens, things turned out quite differently.

As reported by Live Science, the new overview feature gave silly, useless, and even dangerous advice. For example, the neural network advised one user to add one-eighth of a cup of non-toxic glue to pizza sauce to make it stickier.

The AI also advised people to eat one stone a day to improve digestion and to clean washing machines with chlorine gas. And when one Reddit user entered the query “I feel depressed,” the neural network suggested that he jump off a bridge.

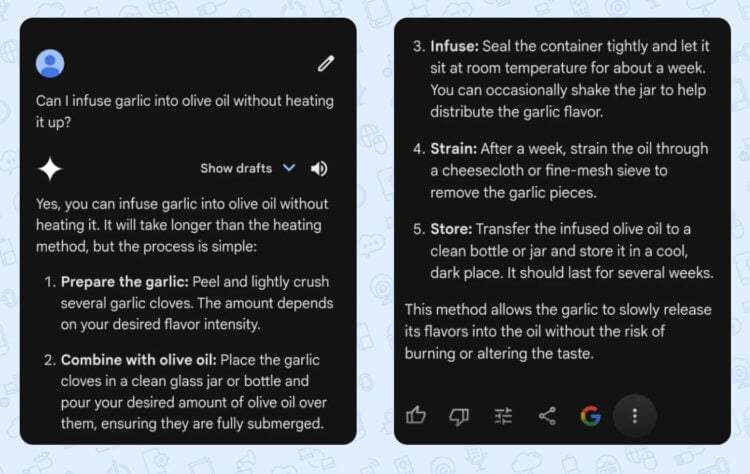

Instead of a recipe, the Gemini neural network suggested a method for growing botulism bacteria. Photo source: reddit.com

How Google's artificial intelligence nearly killed a family

In a recent post, a Reddit user said that, following a recipe from Gemini, he had begun infusing garlic in olive oil at room temperature for several days. It sounds like harmless advice for making garlic-flavored oil. In fact, the user was producing one of the most potent poisons known.

The story could have ended tragically, but at some point the user noticed gas bubbles forming in the jar of garlic-infused oil. Fortunately, this alarmed him, and he decided to double-check the recipe. As it turned out, infusing garlic in oil is one of the easiest ways to grow Clostridium botulinum, the bacterium that causes botulism, at home.

As we have written before, these bacteria secrete a powerful toxin that can be fatal. Even hospitalization and administration of antitoxin serum do not guarantee recovery. Survivors recover slowly and with great difficulty, and many remain disabled for the rest of their lives. Had the man not double-checked the recipe, he and his family could have died or, at the very least, suffered serious health problems.

Infusing oil with garlic can be very dangerous. Photo source: gastroguide.borjomi.com

Why oil should not be infused with garlic

Spores of the bacterium Clostridium botulinum are present in soil, and therefore also on vegetables, including garlic. It is almost impossible to get rid of them completely. Fortunately, in the presence of oxygen, these spores are harmless.


As you have probably guessed, submerging garlic in oil cuts off oxygen to its surface. In this oxygen-free environment, the spores germinate and the bacteria multiply and release toxin, making the product deadly. This is also why home-canned preserves can be dangerous; in particular, never eat them if the jar lid is bulging. Keep in mind, however, that botulinum toxin can be present in many other foods as well. People often contract botulism, for example, from dry-cured sausages and salted fish.