More than 50 leading artificial intelligence researchers have announced in an open letter that they will boycott the Korea Advanced Institute of Science and Technology (KAIST), a prestigious teaching and research university in South Korea, over its plans to open a laboratory to develop weapons that use artificial intelligence.

The AI experts were angered that KAIST had signed an agreement with the defense company Hanwha Systems, which, according to various sources, develops powerful weapons, including cluster munitions, which are banned under the UN Convention on Cluster Munitions (a treaty South Korea has not signed).

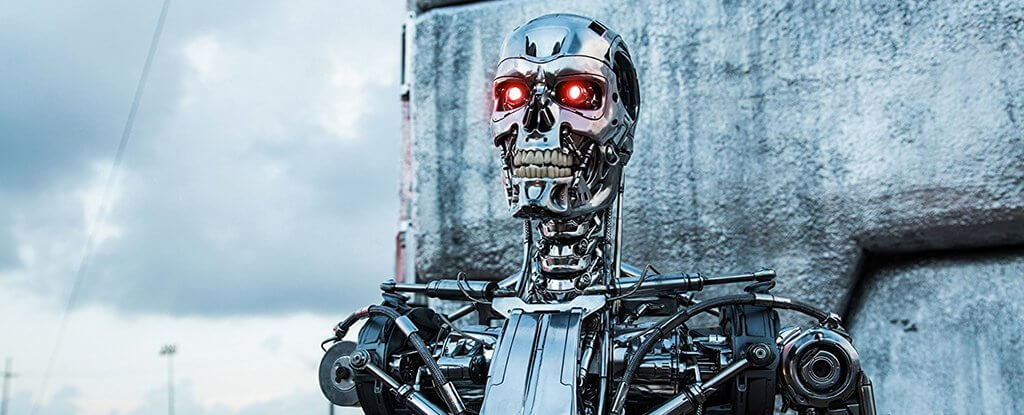

“While the United Nations is discussing how to contain the threat to international security posed by autonomous weapons, it is regrettable that a prestigious institution like KAIST is looking for ways to accelerate the arms race by developing such new types of weapons,” the AI experts write in the open letter.

“We therefore publicly declare that we will boycott all collaboration with every part of KAIST until the President of KAIST provides assurances that the center will not develop autonomous weapons capable of operating without meaningful human control.”

KAIST has some of the world’s best robotics and science laboratories, where it researches and develops a wide range of advanced technologies, from liquid batteries to medical equipment.

KAIST President Shin Sung-chul said he was saddened by the decision to boycott the institute, adding that KAIST has no intention of creating killer robots.

“As an academic institution, we value human rights and ethical standards. KAIST will not conduct any research activities that run counter to human dignity, including developing autonomous weapons that lack human control,” Shin said in his official statement on the boycott.

Given the rapid pace of hardware and software development, many experts worry that sooner or later we will create something we cannot control. Elon Musk, Steve Wozniak, the late Stephen Hawking and many other prominent figures in technology have at various times urged governments and the UN to restrict the development of AI-powered weapons systems.

Many believe that if we arrive at missiles and bombs that can operate independently of human control, the consequences could be devastating, especially if the technology were to fall into the wrong hands.

“Creating autonomous weapons will lead to the third revolution in warfare. This technology will allow military conflicts to be fought faster, more destructively and on a larger scale,” the authors of the open letter write.

“These technologies have the potential to become weapons of terror. Dictators and terrorists could use them against innocent populations, ignoring any ethical considerations. This is a real Pandora’s box, which, once opened, would be almost impossible to close.”

UN member states will meet next week to discuss a ban on lethal autonomous weapons capable of killing without human intervention. This is far from the first such meeting, yet the talks have still not produced any agreement. One obstacle to reaching concrete decisions is that some countries do not object to developing these technologies, because they could strengthen their military capabilities. Private companies are another stumbling block, as they actively resist government oversight of their own artificial intelligence models.

Most people seem to agree that killer robots are bad. Even so, agreeing on concrete steps to govern their development has so far proved impossible. Perhaps KAIST’s plans to develop new weapons systems will draw more parties into the much-needed discussion of the problem.

Many scientists are calling for guidelines on the development and deployment of AI systems. However, even if such guidelines existed, it would likely be very difficult to ensure that every party follows them.

“We are locked in an arms race that nobody wants. KAIST’s actions will only accelerate it. We cannot tolerate this. I hope this boycott will add urgency to the discussion at the UN on Monday,” said Toby Walsh, a professor of artificial intelligence at the University of New South Wales in Australia, who also signed the letter.

“This is a clear appeal to the artificial intelligence and robotics community not to support the development of autonomous weapons. Any other institutions wishing to open similar labs should think twice.”

AI experts announce a boycott of a South Korean institute over AI weapons development

Nikolai Khizhnyak