Virtual reality is not limited to the world of entertainment. It is also being adopted in more practical fields, for example for assembling parts of a car engine or for letting people “try on” the latest fashion trends without leaving home. And yet the technology still struggles with the problems of human perception. Virtual reality clearly has some pretty cool applications. At the University of Bath it is used for exercise; imagine going to the gym to take part in the “Tour de France” and ride alongside the best cyclists in the world.

In technical terms, virtual reality does not get along well with human perception, that is, with how we take in information about the world and build an understanding of it. Our perception of reality determines our decisions and, for the most part, relies on our senses. Designing an interactive system therefore means considering not only the hardware and software but also the people themselves.

Designing virtual reality systems that carry people to new worlds with an acceptable sense of presence is a very hard problem. The more complex the VR experience, the harder it becomes to quantify how much each element of the experience contributes to the perception of the person wearing the headset.

When watching a 360-degree film in virtual reality, for example, how would we determine which contributes more to the viewer's involvement: the computer-generated imagery (CGI) or the surround sound? VR research needs a knife-and-axe technique: cut away the unnecessary and chop off the excess, then add elements back one at a time and evaluate the effect each has on a person's perception.

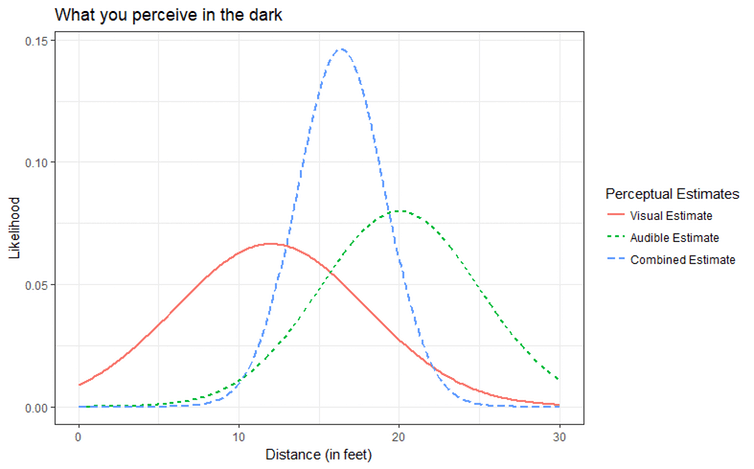

There is a theory at the interface of computer science and psychology. Maximum likelihood estimation explains how we combine the information we receive from all our senses, integrating it to inform our understanding of the environment. In its simplest form, the theory claims that we combine sensory information optimally; each sense contributes to our estimate of the environment, but in general it is a pretty noisy process.

Noisy signals

Imagine a man with good hearing walking down a quiet side street at night. He sees a dark shadow in the distance and hears the distinct sound of footsteps coming toward him. He cannot be sure of what he sees, however, because the darkness adds “noise” to the visual signal. So he relies on his hearing, because in a quiet environment sound is the more reliable signal in this example.

This scenario is shown in the accompanying image: the estimates from the man's eyes and ears are combined to give an optimal estimate that lies somewhere in between.
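For readers who like to see the arithmetic, here is a minimal sketch of how this kind of combination is usually modelled. It assumes each sense gives an independent estimate with Gaussian noise, in which case the maximum likelihood answer is a weighted average where each sense is weighted by its reliability (one over its variance). The `Cue` class, the `combine_cues` function and all the numbers are hypothetical and chosen only to illustrate the night-time street example; they are not taken from the Bath research.

```python
from dataclasses import dataclass


@dataclass
class Cue:
    """One sense's estimate of the same quantity, e.g. distance in metres."""
    name: str
    estimate: float  # what this sense reports
    variance: float  # noise in this sense; larger means less reliable


def combine_cues(cues):
    """Fuse independent Gaussian cues by inverse-variance weighting.

    Returns (combined_estimate, combined_variance, weights_by_name).
    """
    if not cues:
        raise ValueError("at least one cue is required")
    if any(c.variance <= 0 for c in cues):
        raise ValueError("variances must be positive")
    # Reliability of a cue is the inverse of its variance.
    reliabilities = [1.0 / c.variance for c in cues]
    total = sum(reliabilities)
    # Each weight is the cue's share of the total reliability.
    weights = {c.name: r / total for c, r in zip(cues, reliabilities)}
    estimate = sum(weights[c.name] * c.estimate for c in cues)
    # The fused estimate is less noisy than any single cue.
    return estimate, 1.0 / total, weights


# Hypothetical numbers: in the dark, vision is noisy; on a quiet street,
# hearing is comparatively reliable.
vision = Cue("vision", estimate=12.0, variance=4.0)
hearing = Cue("hearing", estimate=8.0, variance=1.0)

estimate, variance, weights = combine_cues([vision, hearing])
print(f"combined estimate: {estimate:.1f} m, variance: {variance:.1f}")
print({name: round(w, 2) for name, w in weights.items()})
```

With these made-up values hearing receives most of the weight, so the combined estimate lands between the two senses but closer to what the man hears, and its variance is lower than that of either sense alone.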

Of course, this has not gone unnoticed by developers of virtual reality. Scientists from the University of Bath have used this method to tackle the problem of how people judge distances when wearing VR headsets. In a driving simulator, where people learn to drive, distances in virtual reality can appear compressed, which can make the technology inappropriate for a setting where risk has to be taken into account.

Understanding how people integrate information from their senses is crucial to the long-term success of VR, because it is not only a visual medium. Maximum likelihood estimation helps to model how effectively a virtual reality system needs to render a multi-sensory environment. A better knowledge of human perception will lead to even more immersive VR experiences.

Simply put, the question is not how to separate the signals from the noise; it is how to take in all the signals, noise included, and still deliver the highest-quality virtual environment.

How to solve the problem of human perception of virtual reality?

Ilya Hel