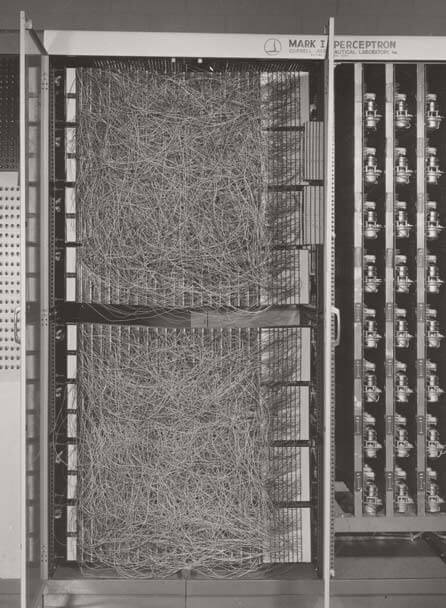

Computer vision emerged as an independent discipline in the early 1950s. In 1951, John von Neumann proposed analyzing micrographs with computers by comparing the brightness of adjacent parts of the image. In the 1960s, research began on recognizing machine-printed and handwritten text, and the first attempts were made to model neural networks. The first device able to recognize letters was Frank Rosenblatt's perceptron. In the 1970s, scientists began studying the human visual system with the aim of formalizing it and implementing it in algorithms, an approach intended to make it possible to recognize objects in images. How modern computer vision works is the subject of today's issue.

Computer vision is a set of methods for teaching a machine to extract information from an image or video. For a computer to find particular objects in images, it must first be trained. This requires a huge training sample, for example a set of photos, some of which contain the desired object and the rest of which do not. This is where machine learning comes in. The computer analyzes the images in the sample, determines which features and combinations of features indicate the presence of the object, and calculates their significance.
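As a rough illustration of how a machine can learn the significance of features from labeled examples, here is a minimal sketch of a perceptron, the kind of model mentioned in the history above. The three features and the toy data are hypothetical, and real systems learn from pixel data rather than hand-written feature flags.

```python
def train_perceptron(samples, labels, epochs=100, lr=1.0):
    """Learn one weight per feature; labels are 1 (object present) or 0 (absent)."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        for x, y in zip(samples, labels):
            activation = bias + sum(w * xi for w, xi in zip(weights, x))
            predicted = 1 if activation > 0 else 0
            error = y - predicted  # 0 when the guess was right
            if error != 0:
                # Nudge the weights toward the correct answer.
                weights = [w + lr * error * xi for w, xi in zip(weights, x)]
                bias += lr * error
    return weights, bias


def predict(weights, bias, x):
    """Return 1 if the weighted features say the object is present, else 0."""
    return 1 if bias + sum(w * xi for w, xi in zip(weights, x)) > 0 else 0


# Hypothetical toy sample: [has_wheels, has_windows, is_dark].
# The "object" is present only when the first two features occur together.
samples = [
    [1, 1, 0], [1, 1, 1],             # photos that contain the object
    [1, 0, 0], [1, 0, 1], [0, 1, 0],  # photos that do not
    [0, 1, 1], [0, 0, 1],
]
labels = [1, 1, 0, 0, 0, 0, 0]

weights, bias = train_perceptron(samples, labels)
predictions = [predict(weights, bias, x) for x in samples]
print("learned weights:", weights, "bias:", bias)
print("predictions:", predictions)
```

The learned weights play the role of the "significance" of each feature: a feature that reliably accompanies the object ends up with a larger weight than one that is unrelated to it.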

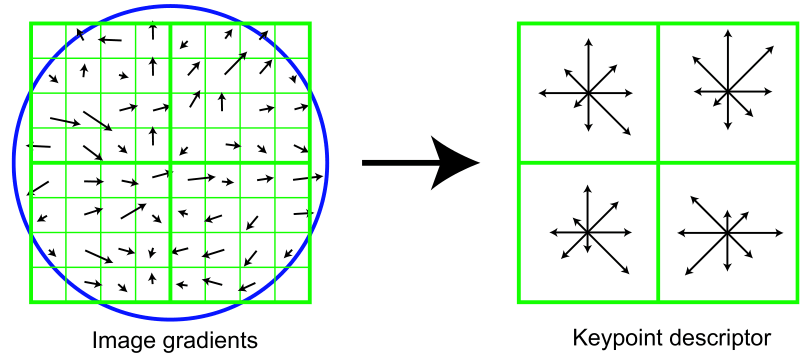

After training, computer vision can be put to practical use. To a computer, an image is a set of pixels, each with its own brightness or color value. For the machine to get an idea of what the picture contains, the image is processed with special algorithms. First, potentially significant locations are identified. This can be done in several ways. For example, the original image is subjected to Gaussian blur several times, each time with a different blur radius, and the results are then compared with one another. This reveals the most contrasting fragments: bright spots and kinks in lines.
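Comparing two blurred copies of the same image is commonly done by subtracting one from the other (a "difference of Gaussians"). The sketch below assumes a grayscale image stored as a list of rows and uses two blur radii chosen only for illustration; the function names are hypothetical and the code is far slower than a real image library.

```python
import math


def gaussian_kernel(sigma):
    """1-D Gaussian weights, truncated at about 3 sigma and normalized to sum to 1."""
    radius = max(1, int(3 * sigma + 0.5))
    weights = [math.exp(-(i * i) / (2 * sigma * sigma))
               for i in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def blur_rows(image, kernel):
    """Blur every row horizontally; pixels past the border repeat the edge pixel."""
    radius = len(kernel) // 2
    width = len(image[0])
    result = []
    for row in image:
        new_row = []
        for x in range(width):
            acc = 0.0
            for k, w in enumerate(kernel):
                xx = min(max(x + k - radius, 0), width - 1)
                acc += w * row[xx]
            new_row.append(acc)
        result.append(new_row)
    return result


def transpose(image):
    return [list(column) for column in zip(*image)]


def gaussian_blur(image, sigma):
    """Separable 2-D blur: blur the rows, then blur the columns."""
    kernel = gaussian_kernel(sigma)
    horizontal = blur_rows(image, kernel)
    return transpose(blur_rows(transpose(horizontal), kernel))


def difference_of_gaussians(image, sigma_small=1.0, sigma_large=2.0):
    """Subtract a strongly blurred copy from a lightly blurred one."""
    fine = gaussian_blur(image, sigma_small)
    coarse = gaussian_blur(image, sigma_large)
    return [[a - b for a, b in zip(ra, rb)] for ra, rb in zip(fine, coarse)]


def strongest_response(dog):
    """Return (row, column) of the pixel with the largest absolute response."""
    best = max((abs(v), y, x) for y, row in enumerate(dog) for x, v in enumerate(row))
    return best[1], best[2]


# Toy image: a dark 9x9 square with one bright spot in the middle.
image = [[0.0] * 9 for _ in range(9)]
image[4][4] = 255.0

dog = difference_of_gaussians(image)
print("most significant location:", strongest_response(dog))
```

Flat regions look the same at both blur radii and cancel out, so only contrasting spots and edges leave a strong response.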

Once the significant locations have been found, the computer describes them numerically. The numerical record of an image fragment is called a descriptor. Descriptors make it possible to compare image fragments fairly accurately without using the fragments themselves. To speed up computation, the computer clusters the descriptors, that is, distributes them into groups. Similar descriptors from different images end up in the same cluster. After clustering, all that matters is the number of the cluster whose descriptors are most similar to the given one. The transition from a descriptor to its cluster number is called quantization, and the cluster number itself is called a quantized descriptor. Quantization substantially reduces the amount of data the computer has to process.
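Clustering and quantization can be sketched as follows. The article does not name a clustering algorithm, so k-means is assumed here, with a simple deterministic initialization; the two-number descriptors are hypothetical (real descriptors usually have dozens or hundreds of components).

```python
def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def nearest_cluster(descriptor, centres):
    """Quantization: replace a descriptor with the number of its nearest cluster."""
    return min(range(len(centres)),
               key=lambda i: squared_distance(descriptor, centres[i]))


def k_means(descriptors, k, iterations=10):
    """Group descriptors into k clusters and return the cluster centres."""
    # Assumed initialization: start from the first descriptor, then keep
    # adding the descriptor that lies farthest from the centres chosen so far.
    centres = [list(descriptors[0])]
    while len(centres) < k:
        farthest = max(descriptors,
                       key=lambda d: min(squared_distance(d, c) for c in centres))
        centres.append(list(farthest))

    for _ in range(iterations):
        groups = [[] for _ in range(k)]
        for d in descriptors:
            groups[nearest_cluster(d, centres)].append(d)
        for i, group in enumerate(groups):
            if group:  # keep the old centre if a cluster ends up empty
                centres[i] = [sum(values) / len(group) for values in zip(*group)]
    return centres


# Hypothetical descriptors collected from several images.
descriptors = [
    [0.0, 0.1], [0.1, 0.0], [0.2, 0.1],  # fragments that look alike
    [5.0, 5.1], [5.1, 4.9], [4.9, 5.0],  # a second group of similar fragments
]

centres = k_means(descriptors, k=2)
quantized = [nearest_cluster(d, centres) for d in descriptors]
print("quantized descriptors:", quantized)
```

Each descriptor, a list of numbers, is reduced to a single small integer, which is why quantization cuts the amount of data so sharply.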

Based on the quantized descriptors, the computer can compare images and recognize objects. It compares the sets of quantized descriptors from different images and concludes how similar the images, or individual fragments of them, are. Search engines use the same kind of comparison to search by an image you upload.
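One simple way to compare two sets of quantized descriptors is to count how often each cluster number occurs in each image and compare the resulting histograms. The sketch below assumes cosine similarity as the measure, which the article does not specify; the cluster numbers are made up for illustration.

```python
import math
from collections import Counter


def histogram(quantized, n_clusters):
    """Count how many descriptors of an image fell into each cluster."""
    counts = Counter(quantized)
    return [counts.get(i, 0) for i in range(n_clusters)]


def cosine_similarity(h1, h2):
    """1.0 for identical proportions of clusters, 0.0 when no cluster is shared."""
    dot = sum(a * b for a, b in zip(h1, h2))
    norm1 = math.sqrt(sum(a * a for a in h1))
    norm2 = math.sqrt(sum(b * b for b in h2))
    if norm1 == 0 or norm2 == 0:
        return 0.0
    return dot / (norm1 * norm2)


N_CLUSTERS = 5

# Hypothetical quantized descriptors for an uploaded image and two candidates.
query = histogram([0, 0, 1, 3], N_CLUSTERS)
candidate_a = histogram([0, 1, 3, 3], N_CLUSTERS)  # shares most clusters
candidate_b = histogram([2, 2, 4, 4], N_CLUSTERS)  # shares none

similarity_a = cosine_similarity(query, candidate_a)
similarity_b = cosine_similarity(query, candidate_b)
print("similarity to A:", similarity_a)
print("similarity to B:", similarity_b)
```

An image search would rank the candidates by this score and return the closest matches first.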

How does it work? | Computer vision

Hi-News.ru