Films and TV series such as “Blade Runner”, “Humans” and “Westworld”, which show high-tech robots with no rights, cannot help but disturb people with a conscience. They not only show our extremely aggressive attitude towards robots; they actually shame us as a species. We all like to think that we are better than the characters we see on screen, and that when the time comes we will draw the right conclusions and treat intelligent machines with more respect and dignity.

With each step of progress in robotics and artificial intelligence, we’re getting closer to the day when machines will match human capabilities in every single respect: intelligence, consciousness and emotions. When that happens, we will have to decide whether what stands before us is an object on the level of a refrigerator or a person, and whether we should give it the equivalent of human rights, freedoms and protection.

This question is vast, and no amount of goodwill will make it easy to resolve. It will have to be considered and decided from many different points of view: ethics, sociology, law, neurobiology and AI theory. Yet for now it does not seem that all these parties will reach a common, mutually acceptable conclusion.

Why give AI rights at all?

First, we need to recognize that we are already inclined to extend moral consideration to robots that look much like us. The more intellectually developed and “alive” a machine appears, the more we want to believe that it is like us, even if it is not.

Once a machine acquires basic human capacities, whether we like it or not, we will have to regard it as a social equal and not just as a thing, someone’s private property. The difficulty will lie in our understanding of the cognitive characteristics, or traits if you like, by which the entity before us can be assessed from a moral standpoint, and hence in settling the question of that entity’s social rights. Philosophers and ethicists have been struggling with this problem for thousands of years.

“There are three key ethical thresholds: the capacity to experience pain and empathy, self-awareness, and the ability to see things from a moral point of view and make appropriate decisions,” said James Hughes, sociologist, futurist and head of the Institute for Ethics and Emerging Technologies.

“In humans, if we are lucky, all three of these important traits develop sequentially and progressively. But what if, in machine intelligence, a robot that is not self-aware and experiences neither joy nor pain could still deserve to be called a citizen? We need to find out whether that can happen.”

It is important to understand that intelligence, sentience (the ability to perceive and feel things), consciousness and self-awareness (awareness of oneself as distinct from others) are completely different things. Machines or algorithms can be just as smart as people (if not smarter) and yet lack these three important components. Calculators, Siri and stock-trading algorithms are certainly smart, but they are not aware of themselves, they cannot feel or emote, and they cannot experience the color of a thing or the taste of popcorn.

According to Hughes, self-awareness could be tied to granting an entity minimal personal rights, such as the right to be free rather than enslaved, the right to its own interests in life, and the right to growth and self-improvement. Once it acquires both self-awareness and moral capacity (the ability to distinguish “what is good and what is bad” according to the moral principles of modern society), the entity should be granted full human rights: the right to enter into agreements, to own property, to vote and so on.

“The core values of the Enlightenment compel us to consider these traits from the standpoint of everyone’s equality, and to abandon the radically conservative views that were once commonly accepted and that granted rights, say, only to people of a certain class, gender or region,” says Hughes.

Obviously, our civilization has not yet achieved these lofty social goals, since we still cannot sort out our own rights and are still trying to expand them.

Who has the right to be called a “person”?

All people are persons, but not all persons are people. Linda MacDonald-Glenn, a bioethicist at California State University, Monterey Bay and a lecturer at the Alden March Bioethics Institute at Albany Medical Center, says that the law already has precedents in which entities that do not belong to the human race are treated as subjects of law. In her opinion this is a great achievement, because it opens the possibility of granting AI rights equivalent to human rights in the future.

“In the US, corporations have the status of legal persons. Other countries, too, have precedents that try to recognize the interconnectedness and equality of all living things on this planet. For example, New Zealand law recognizes all animals as sentient beings, and the government actively encourages the development of codes of welfare and ethical conduct. The Supreme Court of India declared the Ganges and Yamuna rivers “living beings” and gave them the status of separate legal persons.”

In addition, in the US, as in other countries, certain animal species, including great apes, elephants, whales and dolphins, are the subject of efforts to extend rights that protect them from confinement, experimentation and abuse. But unlike the first two cases, where personhood is conferred on a corporation or a river as a legal fiction, the animal cases are not an attempt to bend legal rules. Supporters of these proposals argue for real personhood, that is, for individuals who can be characterized on the basis of certain cognitive (mental) abilities, such as self-awareness.

MacDonald-Glenn says it is important to abandon the conservative view and stop regarding animals and AI as simple soulless creatures and machines. Emotions are not a luxury, the bioethicist says, but an integral part of rational thinking and of the norms of social behavior. It is these characteristics, and not the ability to crunch numbers, that should be decisive in settling the question of “who” or “what” deserves moral consideration.

Science is gathering more and more evidence of emotional capacities in animals. Observations of dolphins and whales show that they are at least capable of expressing grief, and the presence of spindle cells (neurons that link distant brain regions and are involved in the complex processes underlying social behavior) may also indicate that they are capable of empathy. Scientists also describe various emotional behaviors in great apes and elephants. It is possible that a conscious AI will also be able to acquire such emotional capacities, which would of course significantly raise its moral status.

“Limiting moral status to those who can think rationally may work for AI, but the idea runs contrary to our moral intuitions. After all, our society already protects those who cannot think rationally: infants, people in a coma, people with significant physical or mental disabilities. And laws protecting animals have recently been promoted,” says MacDonald-Glenn.

On the question of whom to grant moral status, MacDonald-Glenn agrees with the 18th-century English philosopher Jeremy Bentham, who once said:

“The question is not, Can they reason? nor, Can they talk? but, Can they suffer?”

Can a machine become self-aware?

Of course, not everyone agrees that human rights should be extended to non-humans, even if these entities are capable of exhibiting emotions or self-reflexive behavior. Some thinkers argue that only people should be allowed to participate in social relations, that the whole world revolves around Homo sapiens, and that the rest (your game console, refrigerator, dog or android companion) is just “everything else.”

One of them is Wesley J. Smith, an American lawyer, writer and senior fellow at the Discovery Institute’s Center on Human Exceptionalism. Smith believes that we have not yet achieved universal human rights, so it is all the more premature to think about shiny pieces of metal and their rights.

“No machine should ever be considered even a potential bearer of rights,” says Smith.

“Even the most advanced machines are and will always remain machines. A machine is not a living creature. It is not a living organism. A machine will always be a set of programs, a body of code, whether written by a human, by another computer, or even self-programmed.”

In his opinion, only human beings should be treated as persons.

“We have duties not to make animals suffer unjustly, but they too should never be seen as a ‘someone,’” notes Smith.

A small note for the Russian-speaking reader: in the West, animals are treated grammatically as inanimate objects, so the pronoun “it” is often used instead of “he” or “she” when talking about a particular animal. This rule is usually ignored only for pets (dogs, cats and even parrots), which families regard as full-fledged family members. However, Smith points out that the concept of an animal as “sentient private property” is a valuable designation, because “it makes us responsible for using it in a way that does not cause it suffering. After all, ‘kick a dog’ and ‘kick a fridge’ are two very different things.”

A clearly debatable aspect of Smith’s analysis is the assumption that humans or biological organisms possess certain “features” that a machine will never be able to acquire. In earlier eras, these missing features were the soul, the spirit, or some intangible supernatural life force. The theory of vitalism holds that processes in biological organisms depend on this force and cannot be explained in terms of physics, chemistry or biochemistry. However, vitalism quickly lost its relevance under pressure from practitioners and logicians who were not accustomed to attributing the workings of our brain to supernatural powers. And yet the view that a machine will never be able to think and feel as humans do is still firmly entrenched, even among scientists, which only shows once again that our understanding of the biological foundations of human self-awareness is still far from complete and very limited.

Lori Marino, senior lecturer in neuroscience and behavioral biology (ethology) at the Emory Center for Ethics, says that machines will most likely never get any rights, let alone rights at the human level. The reason lies in the findings of neuroscientists such as Antonio Damasio, who believes that consciousness depends on whether the subject’s nervous system has channels that convey excited ions or, as Marino puts it, positively charged ions passing through cell membranes in the nervous system.

“This type of neural transmission is found even in the simplest living organisms, protists and bacteria. And it is the same mechanism that gave rise to neurons, then to nervous systems, and then to brains,” says Marino.

“Robots and AI, at least of the present generation, are governed by the movement of negatively charged ions. We are talking about two completely different mechanisms of being.”

Following this logic, Marino suggests that even a jellyfish would have more feelings than the most complex robot in history.

“I don’t know whether this hypothesis is correct, but it is definitely worth considering,” says Marino.

“Besides, I am simply curious to find out how exactly a ‘living organism’ differs from really complex machines. But I still think legal protection should first be given to animals, and only then should we consider extending it to objects, which, from my point of view, is what robots are.”

David Chalmers, director of the Center for Mind, Brain and Consciousness at New York University, says that it is very difficult to draw firm conclusions about this whole theory, mostly because in their current state these ideas are not yet widely accepted and go far beyond the evidence.

“At the moment there is no reason to believe that a special kind of information processing in ion channels determines the presence or absence of consciousness. Even if this kind of processing were essential, we would have no reason to believe that it requires some special biology rather than a general, well-known pattern of information processing. And if so, a computer simulation of that information processing could be considered conscious.”

Another scientist who believes that consciousness is not a computational process is Stuart Hameroff, professor of anesthesiology and psychology at the University of Arizona. In his view, consciousness is a fundamental phenomenon of the universe, inherent in all living and non-living things. But the human mind far surpasses the consciousness of animals, plants and inanimate objects. Hameroff is a proponent of panpsychism, the view that mind is a universal feature of nature. Following his line of thought, the only brain capable of genuinely subjective evaluation and introspection is one made of biological matter.

Hameroff’s idea sounds interesting, but it lies outside mainstream scientific opinion. True, we still don’t know how mind and consciousness arise in our brain; we only know that they do. Does this mean consciousness cannot be regarded as a process subject to the general laws of physics? Possibly. According to Marino, consciousness cannot be reproduced in a stream of “zeros” and “ones”, but this does not mean that we cannot depart from the standard paradigm known as the von Neumann architecture and create a hybrid AI system in which artificial consciousness arises with the participation of biological components.

A bio-pod from the film “eXistenZ”

Ed Boyden, a neuroscientist in the Synthetic Neurobiology Group and senior lecturer at the MIT Media Lab, says that we as a species are still too young to be asking such questions.

“I don’t think we have a working definition of consciousness that could be used directly to measure it or to create it artificially,” says Boyden.

“Technically, you can’t even tell whether I am conscious. So at the moment it is very hard even to guess whether a machine could become conscious.”

Boyden does not believe that we will never be able to recreate consciousness in an alternative substrate (such as a computer), but he acknowledges that scientists currently disagree about what would be essential for creating such a digital emulation of the mind.

“We need to do a lot more work to understand what the key element is,” says Boyden.

Chalmers, in turn, reminds us that we haven’t even figured out how consciousness arises in the living brain, let alone in machines. At the same time, he believes we have no reason to think that only biological machines can be conscious while synthetic ones cannot.

“Once we understand how consciousness emerges in the brain, we will be able to understand which machines could have that consciousness,” says Chalmers.

Ben Goertzel, chief scientist of Hanson Robotics and founder of the OpenCog Foundation, says that we already have interesting theories and models of consciousness in the brain, but none of them has been brought to a common denominator or explains all the details.

“It remains an open question, and the answer is hidden behind a few differing views. Part of the problem is that many scientists adhere to different philosophical approaches to describing consciousness, even though they agree on the scientific facts and on the theories built from scientific observations of brains and computers.”

How can we detect consciousness in a machine?

The emergence of consciousness in machines is only one question. No less difficult is how we will be able to detect consciousness in a robot or AI. Scientists, starting with Alan Turing, have studied this issue for decades, eventually arriving at linguistic tests to determine whether a respondent is conscious. Ah, if only it were that simple. The point is that advanced chatbots (programs that converse with people) are already able to fool people into believing they are talking to a living person rather than a machine. In other words, we need a more effective and persuasive way of verification.

“Determining personhood in machine intelligence is complicated by the problem of ‘philosophical zombies.’ In other words, you could create a machine that is very good at simulating human conversation, yet has no identity or consciousness of its own,” says Hughes.

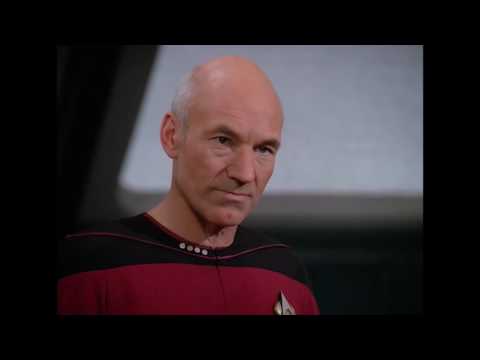

Two Google Home “smart” speakers making small talk

We recently witnessed an example of this when a pair of Google Home “smart” speakers were set up to talk to each other. The whole exchange was filmed and streamed live. Although neither speaker was any more self-aware than a brick, the very nature of the conversation, which grew more and more intense over time, resembled an exchange between two human beings. This, in turn, shows once again that the question of the differences between humans and AI will only become harder and more pressing.

One solution, according to Hughes, is not merely a behavioral test of an AI system, such as the Turing test, but an analysis of the system’s internal complexity, as proposed by Giulio Tononi’s theory. In this theory, consciousness is understood as integrated information (Φ). Integrated information, in turn, is defined as the amount of information generated by a set of elements over and above the sum of the information generated by the individual elements. If Tononi’s theory is correct, then we can use Φ not only to assess the humanlike behavior of a system but also to find out whether it is complex enough to have its own inner, humanlike conscious experience. At the same time, the theory holds that even a system whose behavior and way of thinking are unlike a human’s could be regarded as conscious if its integrated information is high enough to pass the required threshold.
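Tononi’s full Φ is defined over a system’s cause-effect structure and its least-integrated partition, and computing it is well beyond a short example. The “whole versus the sum of its parts” intuition described above can, however, be illustrated with a much simpler quantity from information theory, total correlation (multi-information): the sum of the entropies of the individual elements minus the entropy of the system as a whole. The Python sketch below is an illustrative assumption, not Tononi’s method or any published IIT implementation; the function names and the two toy systems are hypothetical.

```python
# Illustrative sketch only: total correlation (multi-information), a simplified
# "whole vs. parts" measure. This is NOT Tononi's integrated information (Φ).
import math
from itertools import product


def entropy(probs):
    """Shannon entropy, in bits, of an iterable of probabilities."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


def marginal(joint, i):
    """Marginal distribution of element i from a joint dict {state_tuple: prob}."""
    m = {}
    for state, p in joint.items():
        m[state[i]] = m.get(state[i], 0.0) + p
    return m


def total_correlation(joint):
    """Sum of the parts' entropies minus the whole's entropy.

    Zero when the elements are independent; positive when the system as a
    whole carries structure that its parts, taken separately, do not.
    """
    n = len(next(iter(joint)))
    parts = sum(entropy(marginal(joint, i).values()) for i in range(n))
    whole = entropy(joint.values())
    return parts - whole


# Hypothetical toy systems of two binary elements.
# Two independent fair coins: every joint state is equally likely.
independent = {s: 0.25 for s in product((0, 1), repeat=2)}
# Two perfectly coupled elements: they are always in the same state.
coupled = {(0, 0): 0.5, (1, 1): 0.5}

print(total_correlation(independent))  # 0.0
print(total_correlation(coupled))      # 1.0
```

For the two independent coins the measure is 0 bits, since the whole says nothing beyond its parts; for the two coupled elements it is 1 bit. Real Φ differs in important ways (it considers causal interactions and the system’s least-integrated partition), so under IIT a high total correlation alone would not indicate consciousness.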

“Accepting that stock-exchange systems or computerized security systems could be conscious would be a big step away from anthropocentrism, even if these systems show no pain or self-awareness. It would truly open the way to forming and discussing posthuman ethical standards.”

Another possible solution is to discover neural correlates of consciousness in machines, that is, to identify the parts of a machine responsible for producing consciousness. If a machine has these parts and they behave as expected, we will genuinely be able to assess its level of consciousness.

What rights should we give machines?

One day a robot will stand face to face with a human and demand human rights. But will it have earned them? As mentioned above, what stands before us at that moment may be an ordinary “zombie” that behaves as it was programmed and tries to deceive us in order to obtain certain privileges. At that point we will need to be extremely careful not to fall for the trick and grant rights to an unconscious machine. Only once we figure out how to measure machine intelligence and learn to assess levels of consciousness and self-awareness can we begin to consider whether the agent in front of us deserves certain rights and protection.

Fortunately for us, this moment will not come soon. To begin with, AI developers need to create a “basic brain” by completing emulations of the nervous systems of worms, beetles, mice, rabbits and so on. These computer emulations could exist as digital avatars or as robots in the real world. Once that happens, these intelligent entities will cease to be mere objects of study and will rise to the status of entities entitled to moral consideration. But this does not mean that these simple emulations will automatically earn the equivalent of human rights. Rather, the law will have to protect them from misuse and abuse (on the same principles by which animal rights advocates protect animals from cruelty in laboratory experiments).

Eventually, whether through modeling down to the smallest detail or through an effort to understand how our brain works from a computational, algorithmic point of view, science will arrive at computer emulation of the human brain. By that point, we should already be able to detect the presence of consciousness in machines. At least, one would like to hope so. I don’t even want to think that we could find a way to kindle the spark of consciousness in a machine without understanding what we had done. That would be a nightmare.

Once robots and AI have these basic capacities, our computerized protégés will have to pass personhood tests. We still have no universal “recipe” for consciousness, but the usual set of criteria typically involves assessing a minimum level of intelligence, self-control, a sense of past and future, empathy, and the capacity for free will.

“If your choices are predetermined for you, you cannot be credited with the moral value of decisions that are not your own,” says MacDonald-Glenn.

Only after passing such a sophisticated assessment will a machine be eligible to become a candidate for human rights. Nevertheless, it is important to understand and accept that robots and AI that pass these tests will need at least basic rights of protection. For example, the Canadian scientist and futurist George Dvorsky believes that robots and AI should earn the following set of rights if they pass a personhood test:

- The right not to be shut down against their will;

- The right to full and unrestricted access to their own source code;

- The right to have their source code protected from outside interference against their will;

- The right to copy (or not to copy) themselves;

- The right to privacy (namely, the right to conceal their current mental state).

In some cases a machine may be unable to assert its own rights, so we need to consider the possibility of humans (and other citizens who are not human) acting as representatives of such personhood candidates. It is important to understand that a robot or AI does not need to be intellectually and morally perfect in order to pass a personhood assessment and claim the equivalent of human rights. It is worth remembering that people are also far from perfect, so it would be fair to apply the same rules to intelligent machines. Intelligence is a complicated thing. Human behavior is often spontaneous, unpredictable, chaotic, inconsistent and irrational. Our brains are far from perfect, and we must take this into account when making decisions about AI.

At the same time, a self-aware machine, like any responsible and law-abiding citizen, must respect the laws, regulations and rules established by society, at least if it truly wants to become a fully autonomous individual and member of society. Take, for example, children or people with mental disabilities. Do they have rights? Of course. But we are responsible for their actions. The same should apply to robots and AI. Depending on their capabilities, they must either answer for themselves or have a guardian who can not only protect their rights but also take responsibility for their actions.

If we ignore this question

Once our machines reach a certain level of complexity, society, government institutions and the law will no longer be able to ignore their status. We will have no good reason to deny them human rights. Doing so would amount to discrimination and slavery.

Drawing a hard line between biological beings and machines would look like a plain expression of human superiority and ideological chauvinism: the belief that biological humans are special and that only biological intelligence matters.

“When we consider our willingness or unwillingness to expand the boundaries of our morality and the very concept of personhood, the important question becomes: what kind of people do we want to be? Will we follow the ‘Golden Rule’ (do unto others as you would have them do unto you), or will we ignore their moral worth?” asks MacDonald-Glenn.

Granting rights to AI would set an important precedent in human history. If we can regard AI as our social equals, that will directly reflect our social cohesion and show our commitment to justice. Failure to address this issue could lead to widespread social protest and possibly even confrontation between AI and humans. And given the superior potential of machine intelligence, for humans this could turn into a real disaster.

It is also important to realize that respecting the rights of robots could in the future benefit other kinds of persons as well: cyborgs, transgenic people with foreign DNA, and people whose brains have been copied, digitized and uploaded to a supercomputer.

We are still very far from creating machines that deserve human rights. However, considering how complex this issue is and what will be at stake for both AI and humans, one can hardly say that planning ahead would be unnecessary.

When will robots and AI deserve human rights?

Nikolai Khizhnyak