Google's artificial intelligence division has announced a new method for training neural networks that combines advanced algorithms with old video games. Classic Atari video games were used as the learning environment.

The developers at DeepMind (recall that these are the people who created AlphaGo, the neural network that has repeatedly beaten the world's best players at the strategy game Go) believe that machines can learn just as people do. Using the DMLab-30 training environment, built on the shooter Quake III, together with 57 different Atari arcade games, the engineers developed a new machine learning algorithm, IMPALA (Importance Weighted Actor-Learner Architectures). It allows separate agents to learn to perform many tasks and then share that knowledge with one another.

In many respects, the new system builds on an earlier architecture, A3C (Asynchronous Advantage Actor-Critic), in which several agents explore the environment, then periodically pause and exchange what they have learned with a central component. IMPALA can use more agents, and its learning process is somewhat different: the agents send their experience to two “learners”, which then exchange data with each other. In addition, whereas in A3C the gradient of the loss function (in other words, the measure of the discrepancy between predicted and actual values) is computed by the agents, which then send it to the central component, in IMPALA this task is performed by the “learners”.
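To make the division of labor concrete, here is a minimal toy sketch in Python. It is not DeepMind's implementation: it assumes a made-up one-parameter model and a mean-squared-error loss as a stand-in for the real reinforcement-learning loss, and the names `run_actor`, `loss_gradient`, `train_a3c_style` and `train_impala_style` are hypothetical. It runs sequentially, so it leaves out the asynchrony and the importance-weighting correction that give IMPALA its name. The only thing it illustrates is who computes the gradient: A3C-style workers send gradients, while IMPALA-style actors send raw experience and the learner does the math.

```python
import random
from collections import deque
from typing import Deque, List, Tuple

# Hypothetical toy setup: one parameter theta, and the "environment" produces
# (observation, target) pairs with target = TRUE_WEIGHT * observation.
TRUE_WEIGHT = 3.0
Trajectory = List[Tuple[float, float]]


def run_actor(rng: random.Random, length: int = 8) -> Trajectory:
    """Collect raw experience. A real actor would choose actions with its local
    copy of the policy; in this toy environment actions have no effect."""
    trajectory = []
    for _ in range(length):
        x = rng.uniform(-1.0, 1.0)
        trajectory.append((x, TRUE_WEIGHT * x))
    return trajectory


def loss_gradient(theta: float, batch: List[Trajectory]) -> float:
    """Gradient of the mean squared error (theta * x - y)^2 over a batch,
    a stand-in for the real reinforcement-learning loss."""
    pairs = [pair for trajectory in batch for pair in trajectory]
    return sum(2.0 * (theta * x - y) * x for x, y in pairs) / len(pairs)


def train_a3c_style(num_workers: int = 4, steps: int = 200,
                    lr: float = 0.5, seed: int = 0) -> float:
    """Workers compute gradients themselves and send them to the central copy."""
    rng = random.Random(seed)
    theta = 0.0
    for _ in range(steps):
        for _ in range(num_workers):
            gradient = loss_gradient(theta, [run_actor(rng)])  # done by the worker
            theta -= lr * gradient  # the central component only applies it
    return theta


def train_impala_style(num_actors: int = 4, steps: int = 200,
                       lr: float = 0.5, seed: int = 0) -> float:
    """Actors only send trajectories; the learner computes every gradient."""
    rng = random.Random(seed)
    theta = 0.0
    queue: Deque[Trajectory] = deque()
    for _ in range(steps):
        for _ in range(num_actors):
            queue.append(run_actor(rng))  # the actor ships experience, not gradients
        batch = [queue.popleft() for _ in range(len(queue))]
        theta -= lr * loss_gradient(theta, batch)  # done by the learner
    return theta


print(round(train_a3c_style(), 4), round(train_impala_style(), 4))  # → 3.0 3.0
```

Both toy runs reach the same parameter; the difference is where the gradient work happens. Moving it to the learner lets actors stay simple and numerous, which is the property the article credits for IMPALA's scalability.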

An example of a human playing the game:

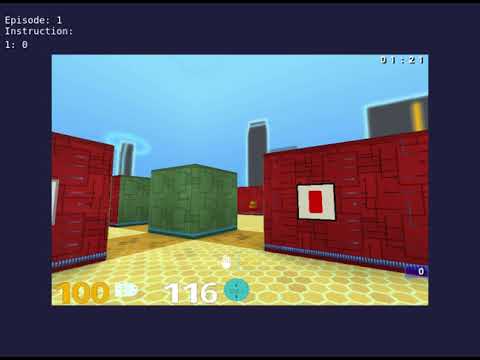

And here is how IMPALA handles the same task:

One of the main problems in AI development is the time training takes and the large amount of computing power it requires. Even autonomous cars need a set of rules they can follow while experimenting on their own and finding solutions to problems. Since we can't simply build robots and release them into the wild to learn, developers rely on simulation and deep learning.

For a modern neural network to learn anything, it has to process a huge amount of information, in this case billions of frames. The faster it does so, the less time training takes.

According to DeepMind, given a sufficient number of processors, IMPALA reaches a throughput of 250,000 frames per second, or 21 billion frames per day. According to The Next Web, this is an absolute record for tasks of this kind. The developers themselves say that their AI system does the job better than comparable systems and humans.
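The two throughput figures are consistent: a day has 86,400 seconds, so 250,000 frames per second works out to about 21.6 billion frames per day, which the article rounds to 21 billion. A quick check in Python (the helper names are illustrative, not from any DeepMind code):

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400 seconds


def frames_per_day(frames_per_second: int) -> int:
    """Convert a sustained frame rate into frames processed per day."""
    return frames_per_second * SECONDS_PER_DAY


def days_to_process(total_frames: int, frames_per_second: int) -> float:
    """How many days a training run of total_frames takes at a given rate."""
    return total_frames / frames_per_day(frames_per_second)


print(frames_per_day(250_000))  # → 21600000000
print(days_to_process(10_000_000_000, 250_000))
```

At this rate, a run of 10 billion frames (an illustrative figure, not one from the article) would take a little under half a day.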

In the future, these AI algorithms could be used in robotics. With optimized machine learning systems, robots will adapt to their environment faster and work more effectively.

Artificial intelligence now learns 10 times faster and more efficiently

Nikolai Khizhnyak