Artificial intelligence. How much is said about him, but we even say it has not really begun. Almost everything you hear on the progress of artificial intelligence, based on the breakthrough, which thirty years. Maintaining progress will require bypass serious limitations serious limitations. Further, in the first person — James Somers.

I stand there, what will soon be the center of the world, or just in a big room on the seventh floor of a gleaming tower in downtown Toronto — which side to see. I am accompanied by Jordan Jacobs, co-founder of this place: Institute of Vector, which this fall opens its doors and promises to be the global epicentre of artificial intelligence.

We are in Toronto, because Geoffrey Hinton in Toronto. Geoffrey Hinton is the father of deep learning, techniques, underlying the hype on the subject of AI. “In 30 years we will look back and say that Jeff — Einstein AI, deep learning, just that we call artificial intelligence,” says Jacobs. Of all the AI researchers Hinton quoted more often than the three behind him, combined. Its students and graduates go to work in the AI lab in Apple, Facebook and OpenAI; Sam Hinton, a leading scientist at Google Brain AI. Virtually any achievement in the field of AI over the past ten years — in translation, speech recognition, image recognition and games — or work of Hinton.

Institute the Vector, this monument to the ascent of ideas of Hinton, is a research centre in which companies from all over the US and Canada — like Google, Uber, and NVIDIA are sponsoring the efforts of technology commercialization AI. The money flow faster than Jacobs manages to ask about it; two of its co-founders interviewed companies in Toronto and the demand for experts in the field of AI was 10 times higher than Canada delivers each year. Institute the Vector in a sense untilled virgin soil to attempt to mobilize the world around deep learning: in order to invest in this technique to teach her to hone and apply. Data centers are being built, skyscrapers are filled with startups, to join an entire generation of students.

When you stand on the floor, “Vector”, one gets the feeling that you are at the beginning of something. But deep learning, in its essence, very old. A breakthrough article by Hinton, written together with David Rumelhart and Ronald Williams, was published in 1986. The work describes in detail the method of back propagation of errors (backpropagation), “backprop” for short. Backprop, according to John Cohen, is “all based on what deep learning — everything”.

If you look at the root, today AI is deep learning, and deep learning is backprop. And it’s amazing, given that backprop more than 30 years. To understand how it happened, just need: as the equipment could wait that long and then cause an explosion? Because once you learn the history of backprop, you will understand what is happening with AI, and also that we may not stand at the beginning of the revolution. We may in the end itself.

Walk from the Institute of Vector in office Hinton at Google, where he spends most of his time (he is now Professor Emeritus at the University of Toronto) is a kind of living advertisement for the city, at least in the summer. It becomes clear why Hinton, who hails from the UK, moved here in the 1980s after working in the University of Carnegie Mellon in Pittsburgh.

Maybe we are not the beginning of the revolution

Toronto — the fourth largest city in North America (after Mexico city, new York and Los Angeles) and certainly more varied: more than half the population was born outside Canada. And this can be seen when walking around the city. The crowd is multinational. There is free health care and good schools, people are friendly, the policy with respect to the left and stable; all this attracts people like Hinton, who says he left the U.S. because of the “Irangate” (Iran-contra — a major political scandal in the United States in the second half of 1980-ies; then it became known that some members of the U.S. administration organized secret arms shipments to Iran, thereby violating the arms embargo against that country). This begins our conversation before dinner.

“Many believed that the US could invade Nicaragua,” he says. “They somehow believed that Nicaragua belongs to the United States”. He says that recently achieved a major breakthrough in the project: “I began working With a very good Junior engineer”, a woman named Sarah Sabur. Sabur Iranian, and she was denied a visa to work in the United States. The Google office in Toronto pulled her.

The Hinton 69 years. He has sharp, thin English face with a thin mouth, large ears, and a proud nose. He was born in Wimbledon and in the conversation reminds the narrator of a children’s book about science: a curious, enticing, trying to explain. He’s funny and a little grandstanding. It hurts to sit because of back problems, so he can’t fly, and the dentist lays on the device, resembling a surfboard.

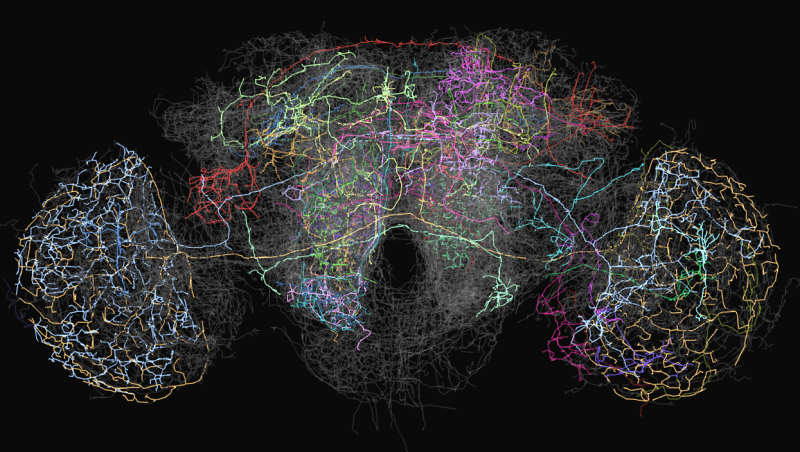

In the 1980s, Hinton was, as now, an expert in neural networks, is considerably simplified network model of neurons and synapses of our brain. However, at that time, it was firmly decided that the neural network is a dead end in AI research. Although the first neural network, “Perceptron” was developed in the 1960-ies and it was considered a first step towards machine intelligence at the human level, in 1969 Marvin Minsky and Seymour Papert mathematically proved that such networks can perform only the simplest functions. These networks had two layers of neurons: input layer and output layer. Network with a large number of layers of neurons between the input and output could, in theory, solve a wide variety of problems, but no one knew how to teach them, so that in practice they were useless. Because of “Perceptrons” from the idea of neural networks refused almost all with a few exceptions, including Hinton.

Breakthrough Hinton in 1986 was to show that the method of error back propagation can train a deep neural network with number of layers more than two or three. But it took another 26 years before increased computing power. In the article of 2012 Hinton and two of his student from Toronto has shown that deep neural networks are trained with the use of backprop, spared the best of the system of recognition of images. The “deep learning” has started to gain momentum. The world suddenly decided that the AI will take over. For Hinton, it was a long-awaited victory.

The reality distortion field of

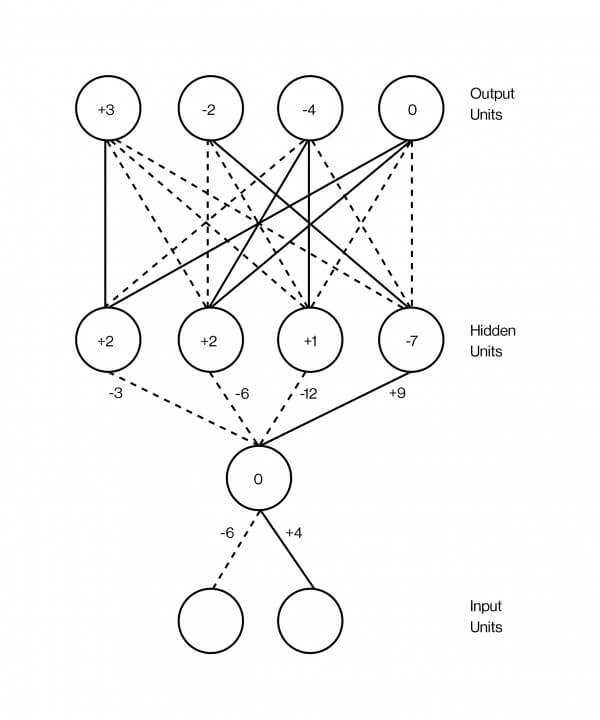

Neural network is usually depicted as a sandwich whose layers are superposed on each other. These layers contain artificial neurons, which are essentially represented by small computing units that are excited — as excited by a real neuron and transmit this excitement to other neurons with which it is connected. Excitation of a neuron is represented by a number, say, 0.13 or 32.39, which determines the degree of excitation of the neuron. And there’s another important number at each connection between two neurons, which defines how many excitations to be transmitted from one to another. This number models the strength of synapses between neurons of the brain. The higher the number, the stronger the Association, which means more excitement flows from one to another.

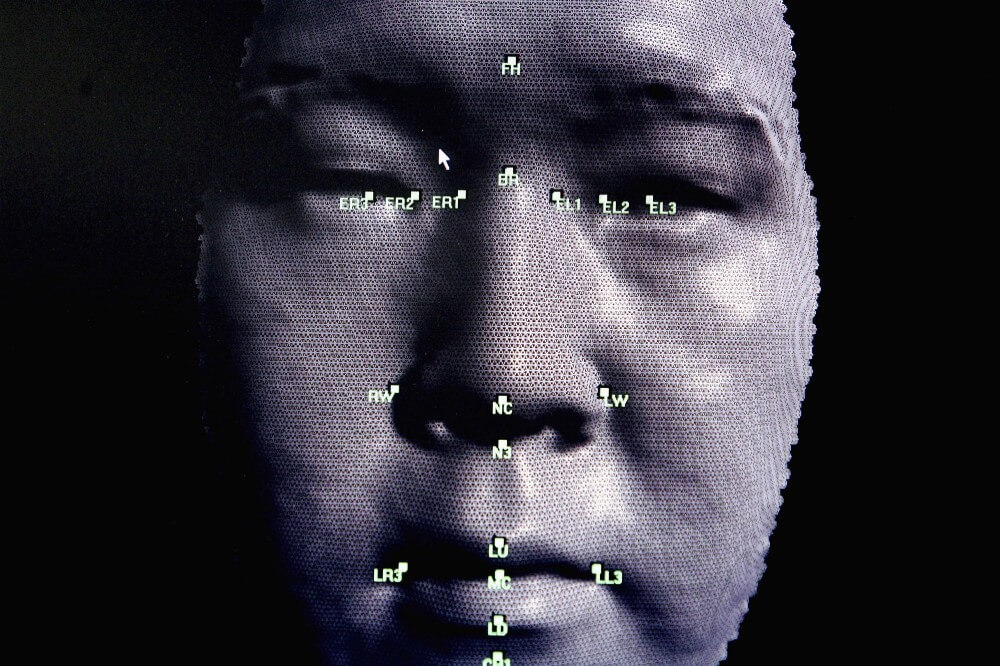

One of the most successful applications of deep neural networks is pattern recognition. Today there are programs that can recognize whether the picture of the hot dog. Ten years ago they were impossible. To make it work, first you need to take a picture. For simplicity, say it’s black-and-white image of 100 pixels by 100 pixels. You feed it to a neural network, setting the excitation of each simulated neuron in the input layer so that it is equal to the brightness of each pixel. This is the bottom layer of the sandwich: 10,000 neurons (100 x 100), representing the brightness of each pixel in the image.

Then this large layer of neurons is connected to another large layer of neurons, already above, say, a few thousand, and they, in turn, to another layer of neurons, but less, and so on. Finally, the top layer of the sandwich layer output will consist of two neurons, one representing the “hot dog” and another as “not a hot dog”. The idea is to train a neural network to excite only the first of these neurons, if the picture is a hot dog, and second, if no. Backprop, the method of error back propagation, which Hinton has built his career doing just that.

Backprop is very simple, although it works best with huge amounts of data. That’s why big data is so important to AI why they so zealously engaged in Facebook and Google and why Vector Institute decided to establish contact with four leading hospitals of Canada and to exchange data.

In this case, the data take the form of a million images, some hot dogs, some without; the trick is to mark these images as having hot dogs. When you create a neural network for the first time, connections between neurons are random weight random numbers to say how much excitation is transmitted through each connection. If the brain synapses are not yet configured. The purpose of backprop to change these weights so that the network up and running: so when you pass a picture of a hot dog on the bottom layer, the neuron “hot dog” in the topmost layer is excited.

Suppose you take the first training a picture of a piano. You transform the intensity of the pixel image 100 x 100 10,000 numbers, one for each neuron of the lower layer network. As soon as the excitation is distributed over a network in accordance with the connection strength of neurons in the adjacent layers, gradually reaches the last layer, one of the two neurons that determine the picture of the hot dog. Because it is a picture with a piano, the neuron “hot dog” needs to show zero, and the neuron is “not a hot dog” needs to show the number higher. For example, it doesn’t work like that. For example, the network was wrong about the image. Backprop is the procedure of strengthening the strength of each connection in the network, allowing you to correct the error in the example of learning.

How does it work? You start with the last two neurons and figure out how they’re wrong: what is the difference between their numbers of excitation and what it should be, really. Then you view each connection, leading to these neurons — descending lower layers and determine their contribution to the error. You keep doing this until you reach the first set of connections on the bottom of the network. At this point, you know, what is the contribution of the individual compounds in the total error. Finally, you change all the weights to reduce the chance for error. This so-called “method of back propagation of error” lies in the fact that you like to send errors back through the network, starting from the reverse end with the exit.

Incredible starts to happen when you are doing this with millions or billions of images in the network becomes well define, the picture of the hot dog or not. And what is even more remarkable is the fact that the individual layers of these networks, image recognition start to see images the same as does our own visual system. That is, the first layer detects the contours of the neurons will fire when the contours are and are not excited when they are not; the next layer defines the sets of edges, corners; the next layer begins to discern shapes; the next layer finds all sorts of items like “open rolls” or “rolls closed” because it activates the appropriate neurons. The network is organized in hierarchical layers, even without being programmed.

Real intelligence does not hesitate, when the problem is slightly different.

This is what so impressed all. It’s not so much the fact that neural networks are well klassificeret image with hot dogs: they build representations of ideas. With text, this becomes even more obvious. You can feed the text of Wikipedia, many billions of words, simple neural networks teaching her to endow every word with numbers corresponding to the excitations for each neuron in the layer. If you imagine all those numbers are coordinates in the complex space, you find a point, known in this context as a vector, for each word in this space. Then you train the network so that the words appear next on the pages of Wikipedia, will be endowed with similar coordinates — and voila, something strange is happening: words that have similar values appear close in this space. “Mad” and “frustrated” would be close by; “three” and “seven” too. Moreover, vector arithmetic allows to subtract vector “France” from “Paris”, add it to “Italy” and find “Rome” in the vicinity. No one said neural network, Rome for Italy is the same as Paris for France.

“It’s amazing,” says Hinton. “It’s shocking”. Neural networks can be seen as an attempt to take things — images, words, record conversations, medical records — and to put them in, as mathematicians say, a multidimensional vector space in which the proximity or remoteness of things will reflect the most important aspects of the real world. Hinton thinks that’s what the brain does. “If you want to know what is the idea,” he says, ” I can give it to you series of words. I can say, “John thought, “oops.” But if you ask: what is the idea? What does it mean for John to have this idea? After all, in his mind, no opening quotes, “oops”, closing quotation marks, in General this is not close. In his head runs a kind of neural activity”. Great pictures of neural activity, if you do the math, it is possible to catch in the vector space, where the activity of each neuron would correspond to a number, and each number is a coordinate on a very large vector. According to Hinton, the idea is a dance of vectors.

Now I understand why the Institute of Vector called?

Hinton creates a field of distortion of reality, you are initially given a sense of confidence and enthusiasm, inspiring confidence in the fact that for vectors, nothing is impossible. In the end, they have already created self-driving cars that detect cancer computers, instant translators of the spoken language.

And only when you leave the room, you remember that these systems of “deep learning” is still pretty stupid, in spite of their demonstrative power of thought. The computer that sees a lot of doughnuts on the table and automatically signs it as “a bunch of donuts lying on the table” seem to understand the world; but when the same program sees a girl who brushes her teeth and says it’s “boy with a baseball bat”, you realize how elusive this understanding, if it ever is.

A neural network is just a thoughtless and vague recognizers images, and how useful can be such recognizers images — because of their strive to integrate in any software — they at best represent a limited breed of intelligence that is easy to cheat. Deep neural network that recognizes the image, can be completely confused if you change one pixel, or add visual noise, imperceptible to humans. Almost as often as we find new ways to use deep learning, often we encounter its limitations. Self-driving cars can’t drive in conditions which haven’t seen before. Machines can’t make proposals that require common sense and understanding of how the world works.

Deep learning in a sense mimics what is happening in the human brain, but superficial — which may explain why his intelligence is so superficial sometimes. Backprop was not detected in the process of dipping into the brain attempts to decipher the very idea; it grew out of models of animals learning by trial and error in an old-fashioned experiments. And most of the important steps that have been made since its inception, did not include anything new on the subject of neurobiology; it was a technical improvement, well-deserved years of work, mathematicians and engineers. What we know about intelligence, nothing compared to what we don’t know yet.

David Duvant, assistant Professor of the same Department, and Hinton, University of Toronto, says that deep learning seems to engineering introduction to physics. “Someone wrote the work and said, “I made this bridge, and he stands!”. Another writes: “I made this bridge and it collapsed, but I added a support and it is worth it.” And they go crazy on the supports. Someone adds arch and all, arch is cool! With physics you can actually understand what will work and why. We have only recently begun to go to at least some understanding of artificial intelligence”.

And Professor Hinton says: “most conferences talk about the introduction of small changes instead of having to think and wonder, “Why what we do now does not work? What is the reason? Let’s focus on that.”

The view from the outside is difficult to produce, when all you see is a promotion for a promotion. But the latest progress in the field of AI, to a lesser extent was a science and more of engineering. Although we better understand what changes will improve the system of deep learning, we vaguely imagine how these systems work and whether they will ever meet something as powerful as the human mind.

It is important to understand, have we been able to extract everything you can from backprop. If Yes, then we expect a plateau in the development of artificial intelligence.

Patience

If you want to see the next breakthrough, a sort of basis for a much more flexible intelligence, you should, in theory, refer to studies similar to the study of backprop in 80 years: when smart people give up because their ideas were not yet working.

A few months ago, I visited the Center for Minds, Brains and Machines, multipurpose facility, housed at MIT, to see how my friend Eyal Dechter defends his thesis on cognitive science. Before the performance, his wife Amy, his dog ruby and his daughter Suzanne has supported him and wished him good luck.

The Eyal began his speech with a fascinating question: how is it that Suzanne, who is only two years, learned to speak, to play, to follow the stories? In the human brain such that it allows him to study well? Learn whether the computer will ever learn so quickly and smoothly?

We understand new phenomena, in terms of things we already understand. We break the domain into pieces and study the pieces. The Eyal is a mathematician and programmer, he thinks about the task — for example, make a soufflé — how about complex computer programs. But you don’t learn to make a souffle by memorizing the hundreds of tiny instructions like “turn the elbow 30 degrees, then look at the countertop, then pull your finger, then…”. If you were to do that in every new case, training would be intolerable and you would be stopped in development. Instead, we see the program steps of the highest level like “whip the egg whites”, which themselves consist of subprograms like “break the eggs and separate the whites from the yolks”.

Computers do not and therefore look stupid. To deep learning recognized hot dog, you have to feed her 40 million images of hot dogs. Band Suzanne learned of the hot dog, just show her the hot dog. And long before that it will have a understanding of the language, which goes much deeper recognition of occurrence of individual words together. Unlike the computer in her head an idea of how the world works. “It surprises me that people are afraid that computers will take their jobs,” says Eyal. “Computers can replace lawyers, not because lawyers are doing something complicated. But because lawyers are listening and talking to people. In this sense we are very far away from it all”.

Real intelligence will not hesitate, if you slightly change requirements to the solution. And the key thesis of Eyal was a demonstration that, in principle, how to make a computer run thus: alive apply all that he already knows to new challenges, quickly grasp on the fly, to become an expert in a completely new area.

In fact, this procedure, which he calls the algorithm “research-compression”. It gives the computer a function of the programmer collecting a library of reusable modular components that allow you to create more complex programs. Knowing nothing about new domain, the computer tries to structure the knowledge of him, simply studying his consolidating discovered and then studying like a child.

His Advisor, Joshua Tenenbaum, one of the most cited researchers in AI. The name of Tenenbaum pop up in half of the conversations I’ve had with other scientists. Some of the key people at DeepMind — team AlphaGo, who beat the legendary world champion at the game of go in 2016 — he worked under him. He is involved in a startup that is trying to give self-driving cars, intuitive understanding of basic physics and intentions of other drivers to better anticipate those occurring in situations not previously faced.

The thesis of Eyal has not yet been applied in practice, even in programmes were not introduced. “Problems on Eyal, a very, very difficult,” says Tenenbaum. “We need to get passed a lot of generations.”

When we sat down to drink a Cup of coffee, Tenenbaum said that explores the history of backprop for inspiration. For decades, backprop was a manifestation of the cool mathematics, for the most part capable of nothing. As soon as computers became faster, and harder, everything changed. He hoped that something similar will happen with his own work and works of his students, but “it may take another couple of decades.”

As for Hinton, he is convinced that overcoming the limitations of the AI associated with the creation of a “bridge between computer science and biology”. Backprop, from this point of view, was a triumph of biologically inspired computing; the idea originally came not from engineering and from psychology. So now Hinton is trying to repeat this trick.

Today neural networks are composed of large flat layers, but in the human neocortex, these neurons are arranged not only horizontally, but also vertically, in columns. Hinton knows why we need such columns in vision, for example, they allow to recognize objects even if you change point of view. So he creates an artificial version — and calls them “capsules” to test this theory. Yet it does not work: capsule is not particularly improved the performance of its networks. But 30 years ago bactrobam was the same.

“That should do it,” he says about the theory of capsules, laughing at his own bravado. “And what does not work yet, this is only a temporary annoyance.”

Materials Medium.com

Artificial intelligence Geoffrey Hinton: the father of “deep learning”

Ilya Hel