A team of researchers from MIT has developed an artificial intelligence system that can fool human judges into thinking it’s a person when it comes to drawing unfamiliar letter-like characters.

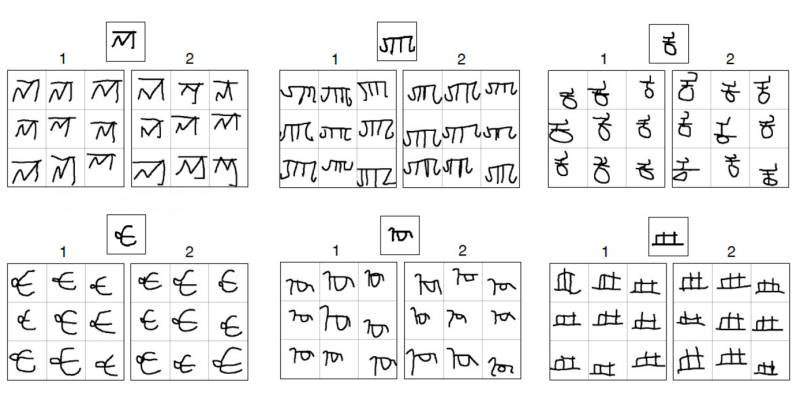

You can think of the experiment, detailed in the new issue of Science, as a kind of visual Turing Test. The software and a human were shown a new character: something that looks like a letter, but isn’t quite. (You can see some examples in the image above.) Then, they were both asked to produce subtle variations on that character. In other tests, the human and computer were instead supplied with a series of unfamiliar characters and asked to produce a new one that fit with the batch.

A team of human judges was then asked to work out which results were produced by computer, and which by humans. Across all the tasks, the judges could only identify the AI’s efforts with about 50 percent accuracy, which is the same as chance.

(Think you can do better than the judges? In the image at the top, a panel of nine shapes was produced by either the AI or a human for each character. Can you identify which panel was generated by a machine? The answers are at the bottom of the page.)

It may seem like a strange experiment, but it has some profound implications. Usually, you see, AI systems have to be trained on massive data sets before they can perform a task. Humans, by contrast, can learn a new concept from a single example, something the researchers refer to as “one-shot learning,” with comparative ease.

The researchers suggest that they’ve created an AI that can do the same, using a technique called Bayesian Program Learning. The technique identifies and learns characters with an approach that’s similar to the way humans understand concepts. The team explains how the software works:

Whereas a conventional computer program systematically decomposes a high-level task into its most basic computations, a probabilistic program requires only a very sketchy model of the data it will operate on. Inference algorithms then fill in the details of the model by analyzing a host of examples.

Here, the researchers’ model specified that characters in human writing systems consist of strokes, demarcated by the lifting of the pen, and that the strokes consist of substrokes, demarcated by points at which the pen’s velocity is zero.

Armed with that model, the system then analyzed hundreds of motion-capture recordings of humans drawing characters in several different writing systems, learning statistics on the relationships between consecutive strokes and substrokes as well as on the variation tolerated in the execution of a single stroke.
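The stroke and substroke structure described above can be illustrated with a short sketch. This is not the researchers' code. It is a minimal Python example that assumes a hypothetical recording format (`PenSample`, with evenly timed samples and a pen-down flag) and a hypothetical pause threshold `eps`. It only shows the segmentation idea: a pen lift ends a stroke, and a point where the pen stops moving ends a substroke.

```python
from dataclasses import dataclass
from math import hypot


@dataclass
class PenSample:
    """One evenly timed sample of a pen recording (hypothetical format)."""
    x: float
    y: float
    pen_down: bool


def split_strokes(samples):
    """Group consecutive pen-down samples into strokes; a pen lift ends a stroke."""
    strokes, current = [], []
    for s in samples:
        if s.pen_down:
            current.append((s.x, s.y))
        elif current:
            strokes.append(current)
            current = []
    if current:
        strokes.append(current)
    return strokes


def split_substrokes(stroke, eps=1e-6):
    """Cut a stroke wherever the pen pauses.

    With evenly timed samples, the distance between consecutive points is
    proportional to speed, so a distance of at most eps is treated as
    zero velocity. eps is an assumed threshold, not a value from the paper.
    """
    substrokes, current = [], [stroke[0]]
    for prev, point in zip(stroke, stroke[1:]):
        step = hypot(point[0] - prev[0], point[1] - prev[1])
        if step <= eps:
            # The pen paused here: close the current substroke and
            # start the next one from the pause point.
            if len(current) > 1:
                substrokes.append(current)
            current = [point]
        else:
            current.append(point)
    if len(current) > 1:
        substrokes.append(current)
    return substrokes
```

For a recording of an "L" drawn in one stroke with a pause at the corner, followed by a pen lift and a short second mark, `split_strokes` would return two strokes and `split_substrokes` would divide the first into two parts. The real system goes further and learns statistics over such parts; that inference step is not attempted here.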

The results seem to speak for themselves, and the researchers aren’t shy about showing their excitement. “In the current AI landscape, there’s been a lot of focus on classifying patterns,” says Josh Tenenbaum, one of the researchers, in a press release. “But what’s been lost is that intelligence isn’t just about classifying or recognizing; it’s about thinking. This is partly why, even though we’re studying hand-written characters, we’re not shy about using a word like ‘concept.’ Because there are a bunch of things that we do with even much richer, more complex concepts that we can do with these characters. We can understand what they’re built out of. We can understand the parts.”

[Science, MIT, New York Times]

Answer: The grids produced by the AI were, by row: 1, 2, 1; 2, 1, 1.