The famous physicist Richard Feynman once said: “What I cannot create, I do not understand. Learn how to solve each problem that was already solved”. The scope of neuroscience, which is increasingly gaining momentum, took Feynman’s words to heart. For neuroscientists, theorists the key to understanding how the intelligence will be his recreation inside of your computer. Neuron for the neuron, they are trying to restore the neural processes that give rise to thoughts, memories or feelings. Having a digital brain, scientists are able to test our current theory of knowledge or to explore the parameters that lead to disruption of brain function. As suggested by philosopher Nick Bostrom of Oxford University, an imitation of human consciousness is one of the most promising (and hard) ways to recreate — and surpass — human ingenuity.

There’s only one problem: our computers can’t cope with the parallel nature of our brains. In polutorachasovom body twisted more than 100 billion neurons and trillions of synapses.

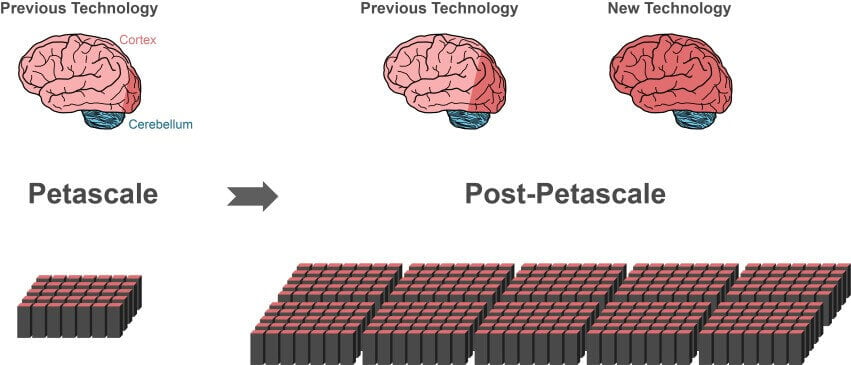

Even the most powerful supercomputers today are behind these scales, like the K computer of Advanced Institute for computational science in Kobe, Japan, can process no more than 10% of the neurons and their synapses in the cortex.

Part of the slack associated with the software. Becomes the faster computer equipment, the more the algorithms become the basis for a full simulation of the brain.

This month an international group of scientists has completely revised the structure of popular algorithm of simulation by developing a powerful technology that radically reduces the computation time and memory usage. The new algorithm is compatible with different kinds of computing equipment, from laptops to supercomputers. When future super-computers come on the scene — and they are 10-100 times more powerful than a current — algorithm is immediately applied on these monsters.

“Thanks to new technology we can use the growing parallelism in modern microprocessors is much better than before,” says study author Jacob Jordan, of the Research center Julia in Germany. The work was published in Frontiers in Neuroinformatics is.

“This is a crucial step towards the creation of technology to achieve the simulation of networks throughout the brain,” the authors write.

The problem of scale

Modern supercomputers consist of hundreds of thousands of subdomains nodes. Each node contains multiple processing centers, which can support a handful of virtual neurons and their connections.

The main problem in the simulation of the brain is how to effectively represent the millions of neurons and their connections in these centers to save on time and power.

One of the most popular simulation algorithms — Memory-Usage Model. Before scientists simulate changes in their neural network, they must first create all of these neurons and their connections in the virtual brain using the algorithm. But here’s the catch: for each pair of neurons, the model stores all the information about connections at each node, which is the receiving neuron — postsynaptic neuron. In other words, the presynaptic neuron, which sends electrical impulses, shouting into the void; the algorithm must determine where a particular message, looking solely at the receiving neuron and the data stored in the node.

It may seem strange, but this model allows all nodes to build its part of the work in neural networks in parallel. This dramatically reduces the download time, which partly explains the popularity of this algorithm.

But as you may have guessed, there are serious problems with scaling. The sender node sends a message to all host of neural nodes. This means that each receiving node must sort each message in the network — even those that are designed for units located in other nodes.

This means that a huge part of the message is discarded at each node, specifically because there is no neuron to which it is addressed. Imagine that the post office sends all employees in the country to carry a desired message. Crazy inefficient, but it works as the principle model of memory usage.

The problem becomes more serious with the growth of the size of the simulated neural network. Each node needs to allocate storage space for the memory “address book” that lists all neural inhabitants and their relationships. In the scale of billions of neurons “address book” becomes a huge swamp of memory.

The size or source

Scientists have cracked the problem by adding the algorithm… index.

Here’s how it works. The receiving nodes contain two pieces of information. The first is a database that stores information about all the neurons-the senders that connect to the nodes. Since synapses are of several sizes and types, which differ in memory usage, the database also sorts your information depending on the types of synapses formed by the neurons in the node.

This setting is much different from previous models in which the associations were sorted from the incoming source of neurons, and the type of synapse. Because of this, the node will no longer have to support the “address book”.

“The size of the data structure thus ceases to depend on the total number of neurons in the network,” explain the authors.

The second block stores data about the actual connections between the receiving node and the sender. Like the first unit, it organizes data according to the type of synapse. In each type of synapse are separated from the data source (the sending neuron).

Thus, this algorithm is specific to its predecessor: instead of storing all the connection information in each node, the receiving nodes store only those data which correspond to virtual neurons in them.

The researchers also gave each of the sending neuron to the target address book. During transmission, data is split into pieces, each fragment that contains code, postal code, sends it to the appropriate receiving nodes.

Fast and smart

The modification worked.

During testing, the new algorithm showed themselves much better than their predecessors, in terms of scalability and speed. On the supercomputer JUQUEEN in Germany, the algorithm worked by 55% faster than previous models on the random neural network, mostly thanks to its straightforward scheme of data transmission.

In a network the size of half a billion neurons, for example, simulation of one second of biological events took about five minutes of work time on JUQUEEN new algorithm. Model-predecessors took in six times more time.

As expected, several scalability tests showed that the new algorithm is much more efficient in the management of large networks, as it reduces the processing time of tens of thousands of transfers data three times.

“The focus now is on accelerating the simulation in the presence of various forms of network plasticity,” concluded the authors. With this in mind, finally, digital human brain may be within reach.

The new algorithm has brought us to a full simulation of the brain

Ilya Hel